Varnish Cache

A quick reference guide for all things Varnish!

- Getting Started with Varnish Cache

- Understanding VCL

- Caching Strategies with Varnish

- Extending Varnish Cache capabilities

- Securing Varnish Cache

- Load Balancing and High Availability

- Varnish Cache and SSL/TLS

- Monitoring and Metrics

- Cache Invalidation

- Ecommerce Websites

- What Content to Cache

- Integrating with a CDN

Getting Started with Varnish Cache

An introduction to Varnish Cache, its benefits, and how to install and configure it.

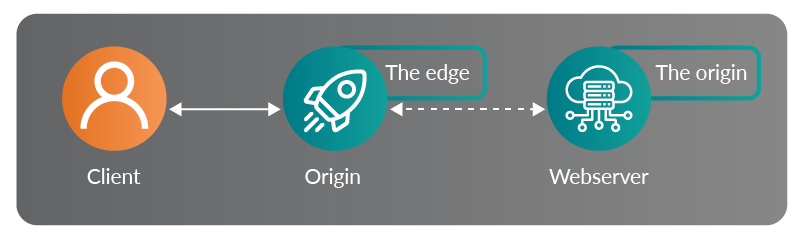

Varnish Cache is a powerful open-source HTTP accelerator (reverse proxy for caching HTTP) designed to significantly improve website performance and reduce server load by caching and serving frequently accessed content. It acts as an intermediary between the client and the backend web server, efficiently storing copies of web pages and assets like images, CSS, and JavaScript in its high-speed memory.

The primary benefit of Varnish Cache lies in its ability to dramatically speed up website response times, leading to a better user experience. By serving cached content directly from memory, Varnish reduces the need for repeated processing of dynamic content on the backend, resulting in faster page loads and reduced server resource utilization.

To install Varnish Cache, you’ll need to ensure your server meets the system requirements and has necessary dependencies like GCC and the PCRE library. Once ready, the installation process involves using package managers like YUM or APT to download and install the software. Alternatively, you can compile Varnish from source code for more customization options.

After installation, configuration is key to getting the best out of Varnish Cache. It involves setting up a Varnish Configuration Language (VCL) file, which defines how Varnish should handle incoming requests and what to cache. Careful consideration should be given to handling cookies, cache invalidation strategies, and the caching of personalized content.

When properly installed and configured, Varnish Cache can significantly improve website performance, reduce server load, and handle sudden traffic spikes more efficiently. As a result, businesses can enjoy better user retention, improved search engine rankings, and increased overall customer satisfaction. Varnish Cache has become an essential tool for websites dealing with high traffic volumes, making it an invaluable asset for developers seeking to optimize web performance.

The biggest advantages of Varnish Cache are its speed and flexibility. It can speed up content delivery by as much as 300-1000x, depending on the architecture, and thanks to the flexibility of Varnish Configuration Language (VCL), it can also be configured to act as a load balancer or to block IP addresses. This combination of speed and configurability has made Varnish Cache a popular alternative to Nginx, another reverse proxy used for caching that has been around longer.

Understanding VCL (Varnish Configuration Language)

A deep dive into Varnish’s powerful configuration language, including examples of common use cases and best practices for writing efficient VCL code.

Understanding Varnish Configuration Language (VCL) is essential for harnessing the full potential of Varnish Cache. VCL is a domain-specific language used to define how Varnish handles incoming HTTP requests and decides what content to cache. It consists of various subroutines that are executed during different stages of request processing.

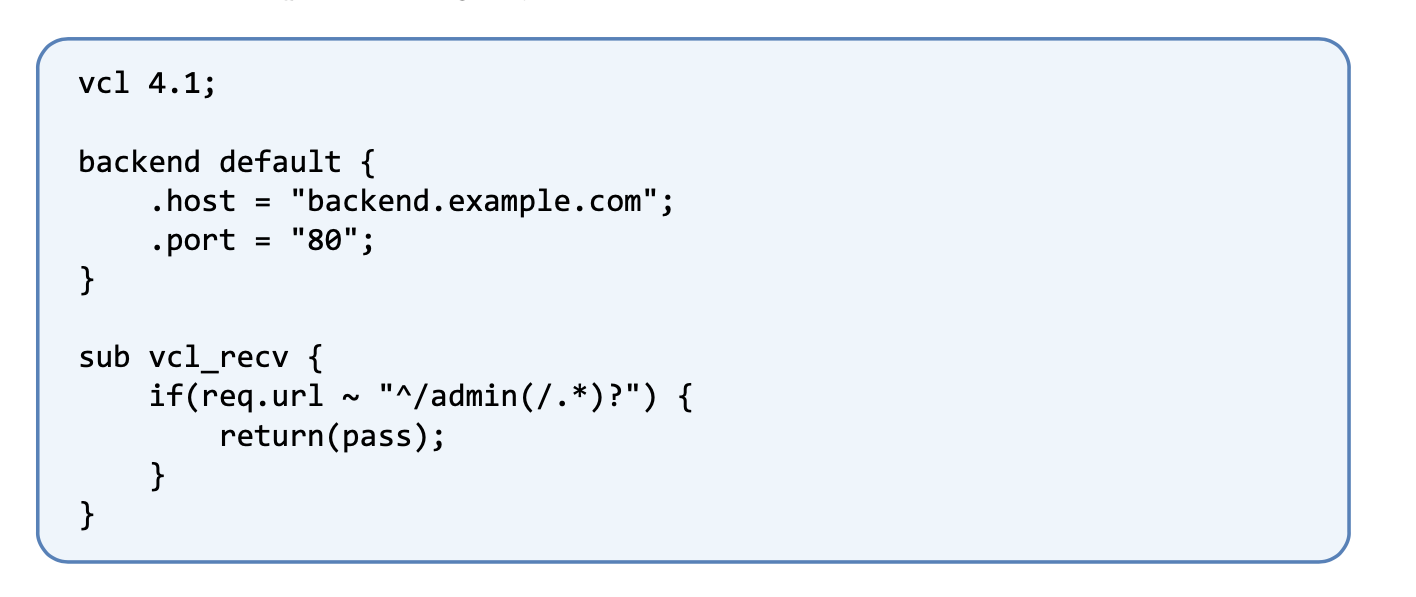

Here’s some sample code to give you an idea of what VCL looks like:
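The following is a minimal sketch rather than a production configuration. It assumes Varnish 4.0 or later and an origin server listening on 127.0.0.1:8080; the “/admin” path and the five-minute TTL are hypothetical values chosen for illustration.

```vcl
vcl 4.0;

# Assumption: the origin web server listens on 127.0.0.1:8080.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_recv {
    # Runs when a request arrives, before the cache lookup.
    # Send a hypothetical admin area straight to the backend.
    if (req.url ~ "^/admin") {
        return (pass);
    }
    # No explicit return: the built-in vcl_recv logic runs next.
}

sub vcl_backend_response {
    # Runs after the backend replies, before the object is stored.
    # Keep HTML responses for five minutes (illustrative value).
    if (beresp.http.Content-Type ~ "text/html") {
        set beresp.ttl = 5m;
    }
}
```

Leaving out the final return statement in each subroutine lets the built-in VCL apply its default safety rules, which is usually a sensible starting point.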

One of the key subroutines is “vcl_recv,” which runs when a request arrives, before the cache lookup and any backend fetch. This subroutine allows developers to make decisions based on request headers, cookies, or client IP addresses. Another crucial subroutine is “vcl_backend_response” (named “vcl_fetch” in Varnish 3 and earlier), executed after fetching content from the backend but before storing it in the cache. Here, developers can adjust the fetched response or define cache policies.

A common use case for VCL is cache control based on HTTP headers. Developers can use the “vcl_recv” subroutine to inspect request headers and decide whether to look the object up in the cache or pass the request to the backend, and the “vcl_backend_response” subroutine to honor or override response headers like “Cache-Control.” Additionally, VCL enables edge-side includes (ESI), where cached and uncached fragments are assembled into a single page at delivery time, optimizing cache efficiency.

Writing efficient VCL code involves several best practices. Minimizing conditional statements, using “return” to stop further processing when appropriate, and leveraging the built-in “std” library for common tasks are some key practices. Properly handling cache invalidation, especially for dynamic content, is crucial to avoid stale data issues.

Mastering VCL and its subroutines empowers developers to finely control Varnish Cache’s behavior, leading to optimal cache performance and improved website responsiveness. Efficient VCL code not only ensures better caching decisions but also contributes to overall web server resource optimization.

Caching Strategies with Varnish

Explore various caching strategies, such as edge-side includes (ESI), cache purging, and cache invalidation techniques to optimize content delivery and improve performance.

Varnish Cache offers a range of caching strategies that can significantly optimize content delivery and enhance website performance. Three key strategies include Edge-Side Includes (ESI), Cache Purging, and Cache Invalidation techniques.

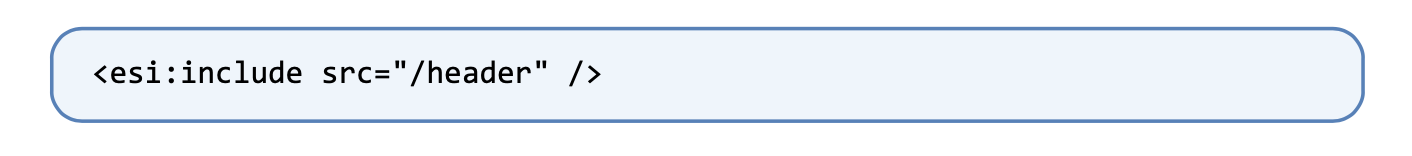

Edge-Side Includes (ESI) is a powerful strategy that allows developers to split web pages into multiple fragments or components. These fragments can be cached separately, and when a request is made, Varnish can dynamically assemble the page by combining the cached fragments with real-time, non-cacheable content from the origin server. ESI is particularly useful for websites with dynamic and personalized elements, as it enables efficient caching of static components while ensuring the freshness of personalized content.

ESI tag
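As a rough sketch of how ESI processing might be enabled, assuming Varnish 4.0 or later: the backend address, the “/fragments/cart” path, and the TTL are hypothetical, and the ESI tag itself lives in the HTML returned by the backend (shown here in a comment).

```vcl
vcl 4.0;

# Assumption: the origin web server listens on 127.0.0.1:8080.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_backend_response {
    # The page template from the backend would contain a tag such as:
    #   <esi:include src="/fragments/cart" />

    # Hypothetical personalized fragment: fetch it fresh every time.
    if (bereq.url ~ "^/fragments/cart") {
        set beresp.uncacheable = true;
        return (deliver);
    }

    # Parse HTML pages for ESI tags and cache the surrounding template.
    if (beresp.http.Content-Type ~ "text/html") {
        set beresp.do_esi = true;
        set beresp.ttl = 10m;
    }
}
```

With this arrangement the template is served from cache while the included fragment is requested from the backend on each page view.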

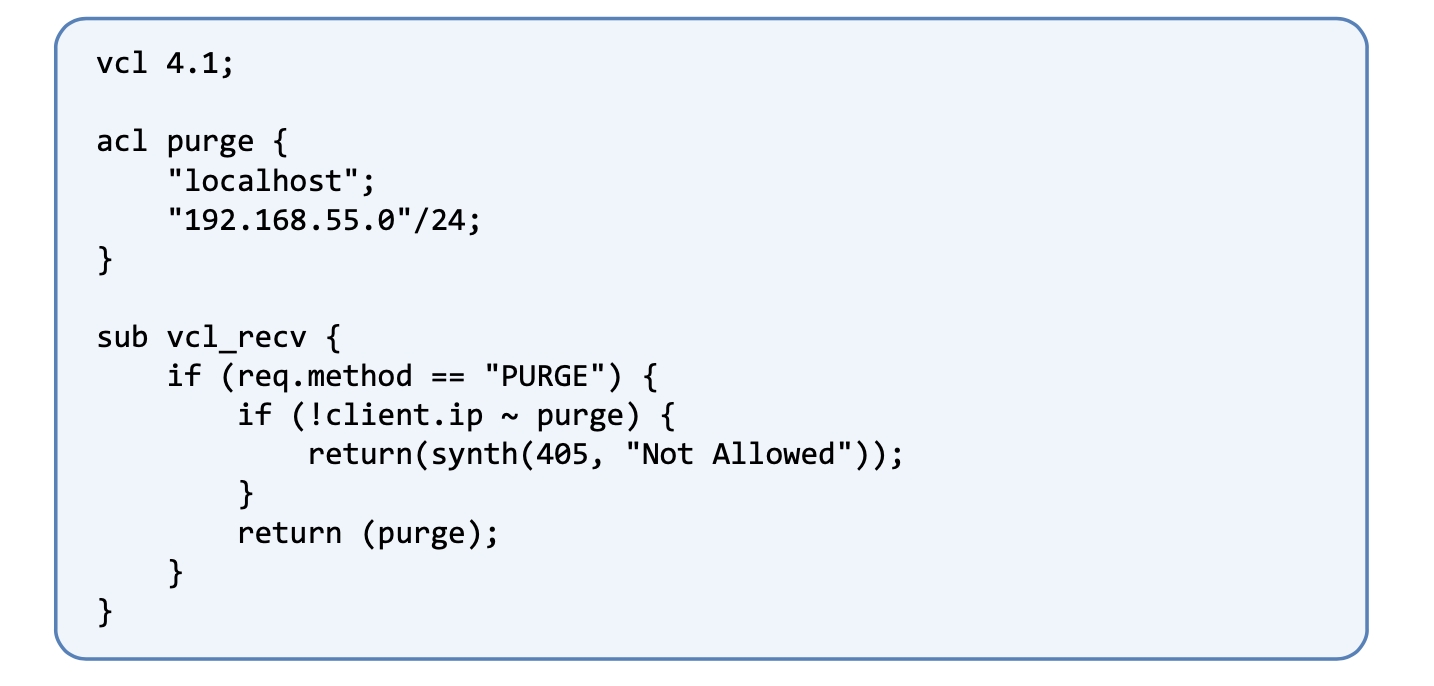

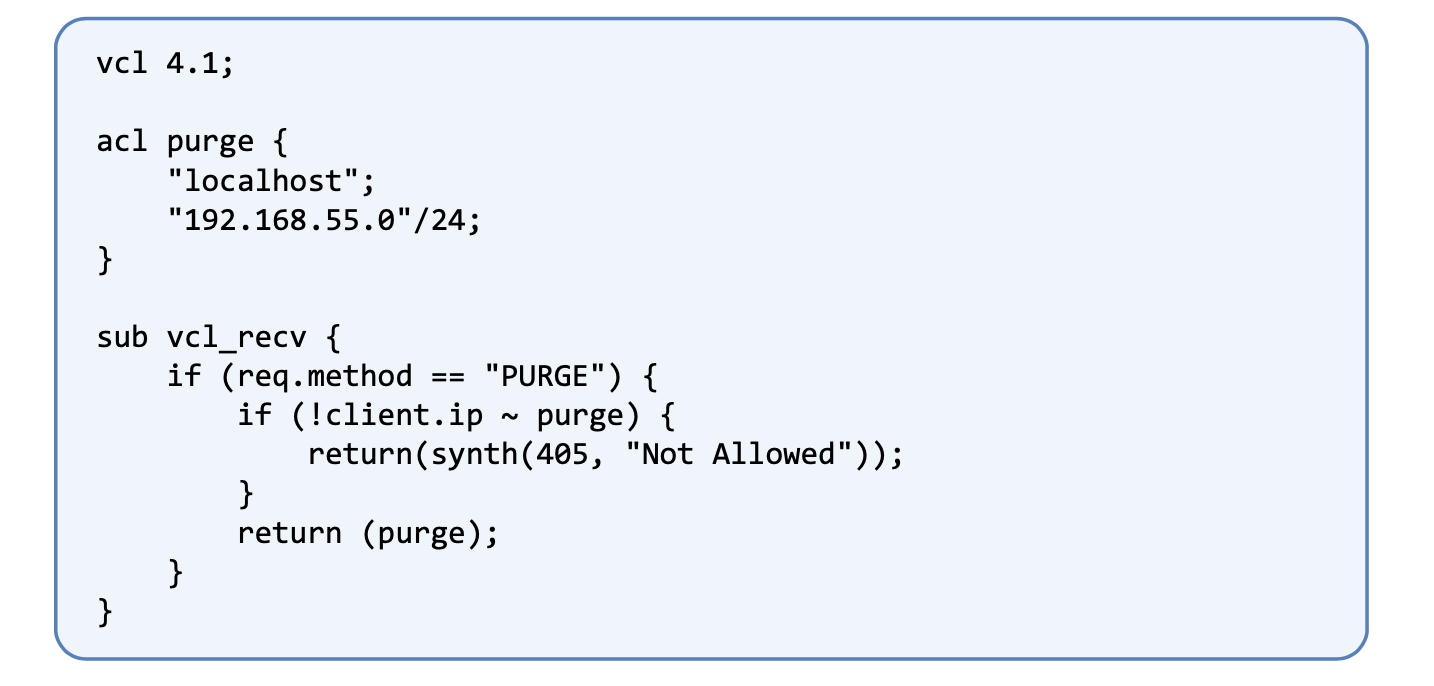

Cache Purging is the process of selectively removing specific cached items to update the cache with fresh content. Varnish provides mechanisms to purge individual URLs or specific objects, allowing developers to invalidate specific parts of the cache when content is updated or becomes stale. By strategically purging outdated content, Varnish ensures that users receive the most up-to-date information, striking a balance between cache efficiency and content accuracy.

Purge code
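A common pattern, sketched here under the assumption of Varnish 4.0 or later, is to accept an HTTP PURGE request from trusted addresses only. The ACL entries and backend address are placeholders.

```vcl
vcl 4.0;

# Assumption: the origin web server listens on 127.0.0.1:8080.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

# Hypothetical list of clients that are allowed to purge.
acl purgers {
    "localhost";
    "192.168.0.0"/24;
}

sub vcl_recv {
    if (req.method == "PURGE") {
        # Reject purge requests from anyone outside the ACL.
        if (client.ip !~ purgers) {
            return (synth(405, "Purging not allowed"));
        }
        # Remove the object matching this URL and Host from the cache.
        return (purge);
    }
}
```

A request such as `PURGE /products/42` sent from an allowed host would then evict that one object, and the next visitor triggers a fresh fetch.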

Cache Invalidation techniques are essential for ensuring that cached content remains relevant. Varnish provides several options, such as time-based expiration, conditional requests with “If-Modified-Since” or “ETag” headers, and cache invalidation through backend control. These techniques help maintain cache freshness and avoid serving outdated or irrelevant content to users.

By adopting these caching strategies, developers can leverage Varnish Cache’s capabilities to optimize content delivery, reduce server load, and provide users with faster and more responsive websites. However, it’s crucial to strike a balance between aggressive caching for performance benefits and proper cache invalidation strategies to ensure users receive accurate and up-to-date information.

Extending Varnish Cache capabilities with VMODs

Add custom functionality and enhance cache performance with Varnish Modules

Extending Varnish Cache capabilities with VMODs (Varnish Modules) is a powerful way to add custom functionality and enhance cache performance for specific use cases. VMODs are dynamic loadable modules written in C or other supported languages, allowing developers to introduce new functionalities without modifying the Varnish source code.

VCL snippet that features the cookie VMOD
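The sketch below assumes a Varnish release that bundles the cookie VMOD (it ships with Varnish Cache 6.4 and later; older releases need it installed separately). The cookie names “sessionid” and “cart” are hypothetical.

```vcl
vcl 4.0;

import cookie;

# Assumption: the origin web server listens on 127.0.0.1:8080.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_recv {
    if (req.http.Cookie) {
        # Load the Cookie header into the VMOD for manipulation.
        cookie.parse(req.http.Cookie);

        # Keep only the cookies the application needs (hypothetical names)
        # and drop everything else, such as analytics cookies.
        cookie.keep("sessionid,cart");

        # Write the filtered list back to the request.
        set req.http.Cookie = cookie.get_string();

        # An empty Cookie header would still prevent caching, so remove it.
        if (req.http.Cookie == "") {
            unset req.http.Cookie;
        }
    }
}
```

Trimming cookies this way tends to raise the hit rate, because requests that differ only in tracking cookies no longer bypass the cache.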

With VMODs, developers can extend Varnish to interact with external systems, perform complex data manipulations, or implement custom caching strategies. For example, VMODs can be created to integrate Varnish with specific databases, implement advanced cache invalidation techniques, or add support for specialized authentication methods.

VMODs enable Varnish to adapt to unique application requirements, improving the overall efficiency and effectiveness of the cache. By encapsulating custom functionalities in VMODs, developers can easily reuse and share these modules across different projects.

The Varnish community actively contributes to the VMOD ecosystem, resulting in a wide range of pre-built modules available for various purposes. These community-contributed VMODs can be readily incorporated into Varnish setups to address common challenges and optimize performance.

Leveraging VMODs extends the power of Varnish Cache, making it a flexible and customizable caching solution for diverse web application scenarios. By tailoring Varnish with VMODs, developers can unleash the full potential of caching while maintaining the ease and efficiency of Varnish’s configuration and deployment.

Securing Varnish Cache and Handling Attacks

How to secure Varnish Cache from DDoS attacks, SQL injections, and brute-force attempts.

Securing Varnish Cache against common web application attacks is crucial to protect both the cache infrastructure and the backend servers from potential vulnerabilities. Here are some essential steps to bolster Varnish Cache’s security:

Rate Limiting and DDoS Protection: Implement rate-limiting mechanisms to restrict the number of requests from a single IP address or user agent within a specified timeframe. Utilize DDoS protection solutions and services to detect and mitigate distributed denial-of-service attacks, safeguarding Varnish from being overwhelmed with malicious traffic.

Input Validation and Sanitization: Enforce strict input validation and data sanitization practices at the backend to prevent SQL injection attacks. Apply parameterized queries and prepared statements to mitigate the risk of malicious code injection.

HTTP Request Filtering: Set up filters to block requests containing suspicious patterns, malicious user-agents, or known attack signatures. Utilize Varnish VCL to inspect and block requests that may pose security risks.

SSL/TLS Encryption: Use SSL/TLS encryption to secure communications between clients and Varnish Cache. This ensures that sensitive data transmitted through HTTP is encrypted and protected from eavesdropping.

Authentication and Authorization: Implement strong authentication mechanisms for Varnish’s administrative interfaces. Use passwords, IP whitelisting, or other authentication methods to restrict access to authorized personnel only.

Monitoring and Logging: Regularly monitor Varnish Cache and backend server logs to identify potential security threats or suspicious activities. Use security information and event management (SIEM) tools to aggregate and analyze log data for early threat detection.

Regular Updates and Patching: Keep Varnish Cache and its dependencies up-to-date with the latest security patches and updates. Regularly check for security advisories and apply fixes promptly to mitigate known vulnerabilities.

By proactively applying these security measures, developers can significantly enhance Varnish Cache’s resilience against DDoS attacks, SQL injections, and brute-force attempts, ensuring a robust and secure caching infrastructure for their web applications.
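The rate-limiting and request-filtering measures above could be sketched in VCL as follows. This assumes Varnish 4.0 or later with the vsthrottle VMOD from the separately installed varnish-modules collection; the blocked network, user-agent pattern, and request limits are illustrative placeholders.

```vcl
vcl 4.0;

# Assumption: vsthrottle is installed from the varnish-modules collection.
import vsthrottle;

# Assumption: the origin web server listens on 127.0.0.1:8080.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

# Hypothetical network to block outright.
acl blocked {
    "203.0.113.0"/24;
}

sub vcl_recv {
    # HTTP request filtering: deny blocked addresses.
    if (client.ip ~ blocked) {
        return (synth(403, "Forbidden"));
    }

    # Deny a hypothetical set of scanner user agents.
    if (req.http.User-Agent ~ "(?i)(sqlmap|nikto)") {
        return (synth(403, "Forbidden"));
    }

    # Rate limiting: allow at most 15 requests per 10 seconds per client.
    if (vsthrottle.is_denied(client.identity, 15, 10s)) {
        return (synth(429, "Too Many Requests"));
    }
}
```

Filtering at the cache keeps abusive traffic away from the backend, although large volumetric attacks still call for upstream DDoS protection.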

Load Balancing and High Availability with Varnish

Setting up Varnish Cache in a load-balanced and highly available environment to ensure optimal performance and reliability.

Load balancing and high availability are critical for ensuring optimal performance and reliability of Varnish Cache in high-traffic environments. Setting up Varnish in a load-balanced and highly available configuration involves distributing the incoming requests across multiple Varnish Cache instances and ensuring seamless failover in case of any server downtime.

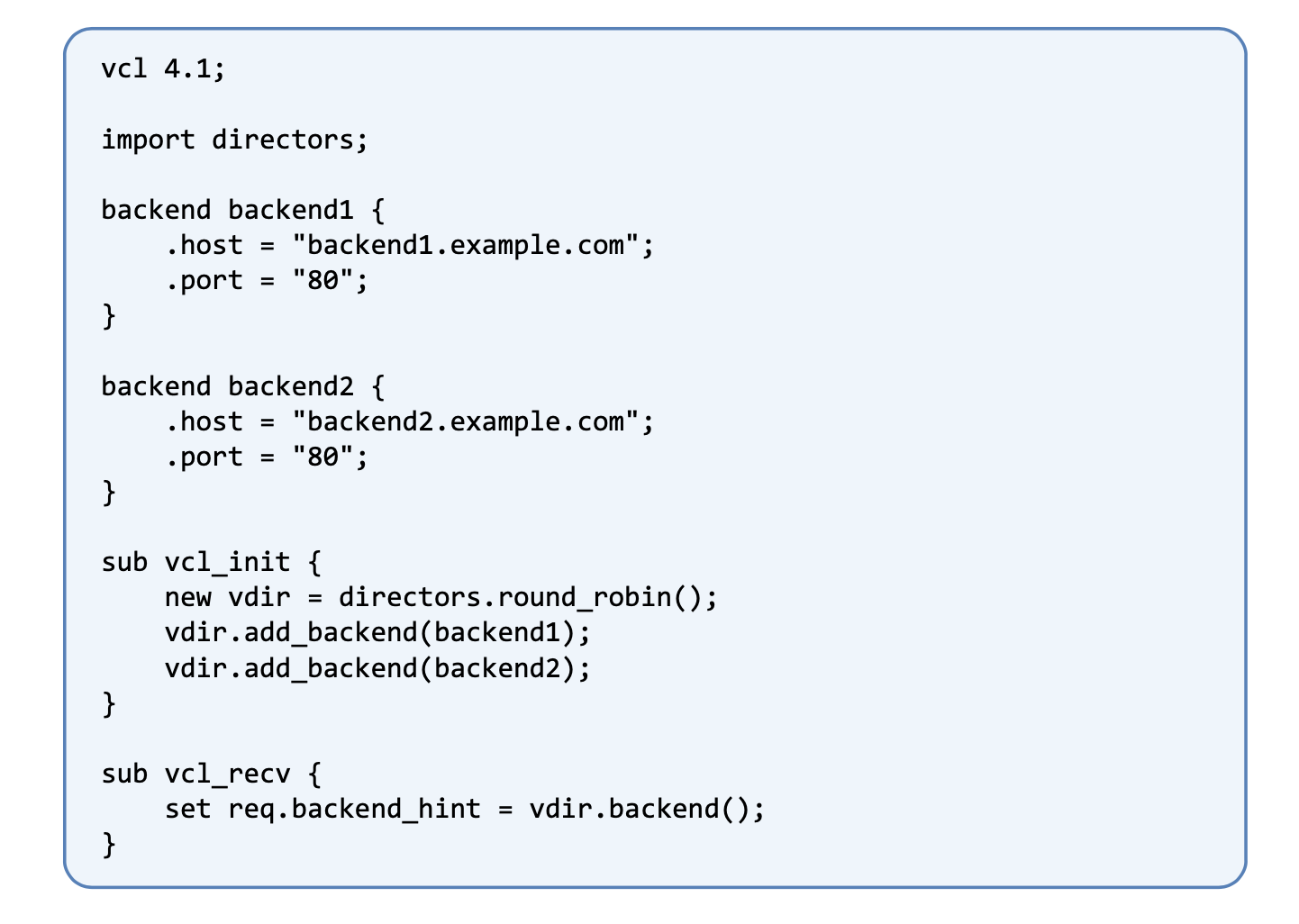

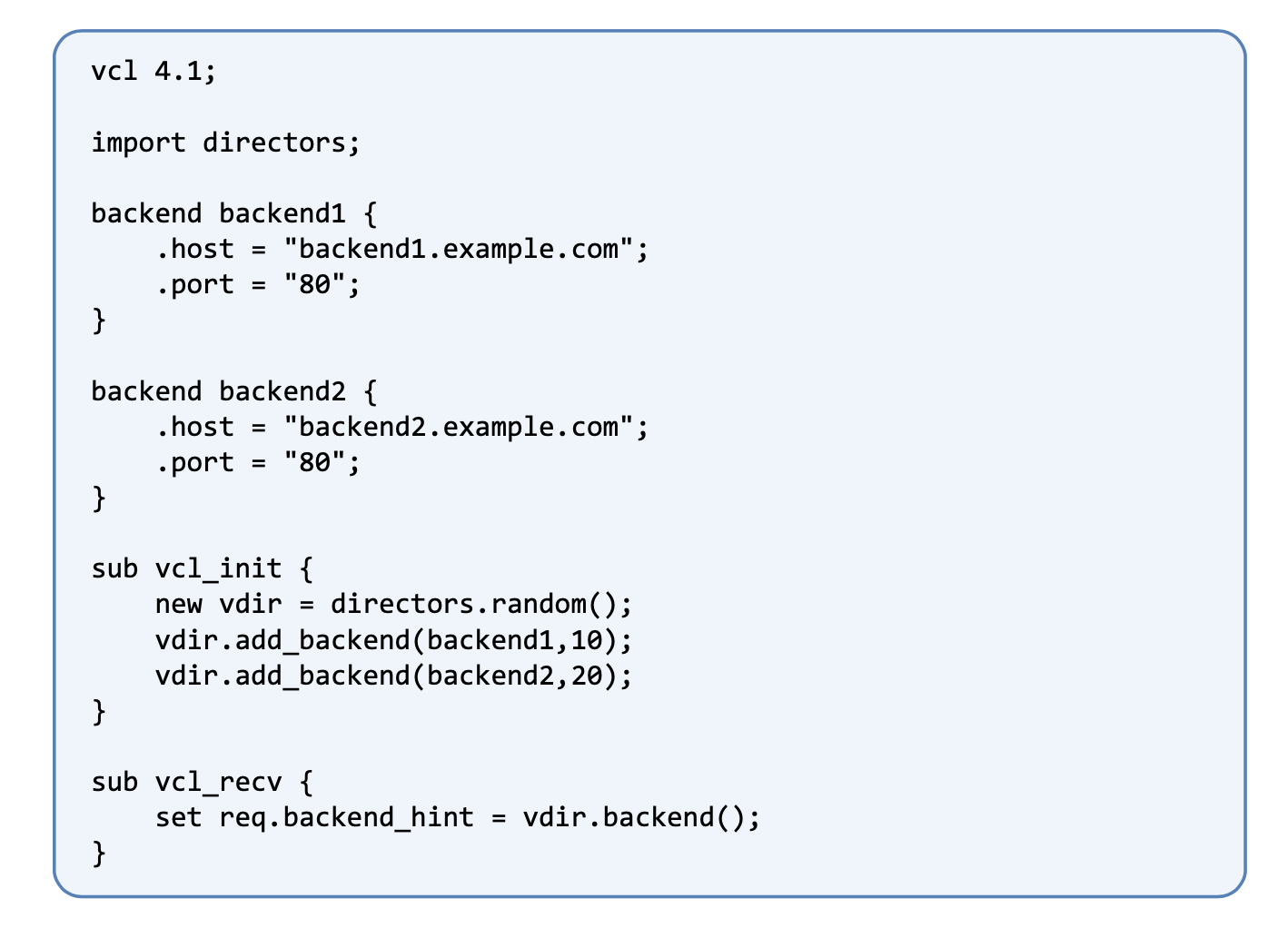

vmod_directors is a load-balancing VMOD.

In example 1, we initialize the director in vcl_init and choose the round-robin distribution algorithm to balance load across backend1 and backend2. In example 2, we’re going for a random distribution.
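The two setups described above might look roughly like this, assuming Varnish 4.0 or later. The backend addresses, the health-check probe settings, and the “/api/” routing rule are hypothetical.

```vcl
vcl 4.0;

import directors;

# Illustrative health check; sick backends are skipped by the directors.
probe healthcheck {
    .url = "/";
    .interval = 5s;
    .timeout = 1s;
    .window = 5;
    .threshold = 3;
}

# Assumption: two origin servers at placeholder addresses.
backend backend1 {
    .host = "192.0.2.11";
    .port = "8080";
    .probe = healthcheck;
}

backend backend2 {
    .host = "192.0.2.12";
    .port = "8080";
    .probe = healthcheck;
}

sub vcl_init {
    # Example 1: round-robin distribution.
    new rr = directors.round_robin();
    rr.add_backend(backend1);
    rr.add_backend(backend2);

    # Example 2: random distribution with equal weights.
    new rnd = directors.random();
    rnd.add_backend(backend1, 1.0);
    rnd.add_backend(backend2, 1.0);
}

sub vcl_recv {
    # Hypothetical routing: API traffic uses the random director,
    # everything else uses round-robin.
    if (req.url ~ "^/api/") {
        set req.backend_hint = rnd.backend();
    } else {
        set req.backend_hint = rr.backend();
    }
}
```

In practice a configuration would normally pick one director; both are used here only so the two algorithms can be compared side by side.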

To achieve load balancing, a load balancer or proxy server, such as HAProxy or Nginx, is typically used. The load balancer sits between the clients and the Varnish Cache instances, distributing incoming requests evenly across the cache servers based on predefined algorithms like round-robin or least connections.

For high availability, a cluster of Varnish Cache instances is deployed in active-passive or active-active mode. In active-passive mode, one Varnish instance serves traffic while others remain on standby. If the active instance fails, the load balancer automatically redirects traffic to the next available cache server. In active-active mode, all Varnish instances actively handle incoming requests simultaneously, balancing the load across the cluster.

Each Varnish instance keeps its own cache, and open-source Varnish does not share cached objects between instances through a mechanism like NFS. To keep the cluster consistent, invalidation requests (purges or bans) are typically sent to every instance, and the load balancer can hash on the URL so that each object is cached on a single node.

Additionally, implementing health checks helps the load balancer identify the health of individual Varnish instances and automatically remove unhealthy servers from the load balancing pool.

By setting up Varnish in a load-balanced and highly available environment, developers can achieve improved performance, scalability, and fault tolerance. This configuration ensures that Varnish Cache can handle high traffic volumes efficiently and provides a seamless user experience even in the face of server failures or maintenance events.

Varnish Cache and SSL/TLS

Configuring Varnish to work seamlessly with SSL/TLS encrypted traffic, including handling certificates and ensuring secure connections.

Configuring Varnish to work with SSL/TLS is essential for ensuring secure and encrypted communication between clients and the cache infrastructure. Here are the steps to achieve this:

Obtain SSL/TLS Certificates: Acquire SSL/TLS certificates from a trusted Certificate Authority (CA) for your domain. These certificates will be used to encrypt the communication between clients and Varnish.

Install a TLS Terminator: Open-source Varnish Cache does not terminate SSL/TLS itself, so install a terminating proxy such as Hitch, Nginx, or HAProxy in front of it, and make sure that proxy is built against a maintained TLS library like OpenSSL.

Configure the Listeners: Have the terminator listen on the SSL port (usually 443) and forward decrypted requests to the address and port where Varnish listens. The Varnish listening address is set with the “-a” option of varnishd rather than in the VCL, while the VCL backend definition specifies the origin server’s IP address or hostname and port number.

Enable SSL/TLS: Enable SSL/TLS termination by pointing the terminator at the certificate and private key files obtained in the first step. These paths belong in the terminator’s configuration, not in the VCL.

Implement Forward Secrecy: To enhance security, enable Forward Secrecy (FS) by configuring the terminator to prefer modern, secure cipher suites with ephemeral key exchange. This ensures that even if the private key is compromised in the future, past encrypted communications remain secure.

Configure HSTS Headers: Implement HTTP Strict Transport Security (HSTS) headers in Varnish’s VCL to instruct clients to access the website only via HTTPS. This prevents potential downgrade attacks.

Use OCSP Stapling: Enable Online Certificate Status Protocol (OCSP) stapling on the TLS terminator to improve the performance and security of certificate validation.

Load Balancing for SSL Offloading: If you are using a load balancer for SSL offloading, ensure that the load balancer forwards decrypted requests to Varnish for further caching and processing.

By following these steps, developers can seamlessly configure Varnish to work with SSL/TLS encrypted traffic. This enhances the privacy and security of data transmitted over the network and promotes user trust in a web application.
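The HSTS step can be handled in VCL, as in the sketch below. It assumes Varnish 4.0 or later and, importantly, that the proxy in front of Varnish sets an “X-Forwarded-Proto” header to “https” on decrypted requests; without that header the redirect below would loop. The max-age value is illustrative.

```vcl
vcl 4.0;

# Assumption: the origin web server listens on 127.0.0.1:8080.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_recv {
    # Assumption: the TLS terminator marks decrypted HTTPS requests
    # with X-Forwarded-Proto: https. Anything else is redirected.
    if (req.http.X-Forwarded-Proto != "https") {
        return (synth(301, "Moved Permanently"));
    }
}

sub vcl_synth {
    # Turn the synthetic 301 into a redirect to the HTTPS URL.
    if (resp.status == 301) {
        set resp.http.Location = "https://" + req.http.Host + req.url;
        return (deliver);
    }
}

sub vcl_deliver {
    # Tell browsers to use HTTPS for the next year (illustrative value).
    if (req.http.X-Forwarded-Proto == "https") {
        set resp.http.Strict-Transport-Security = "max-age=31536000; includeSubDomains";
    }
}
```

Setting the header at the cache keeps the policy in one place, regardless of which backend produced the response.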

Varnish Cache and HTTPS

One challenge with Varnish Cache is that it is designed to accelerate HTTP, not the secure HTTPS. But increasingly websites are moving towards HTTPS for better protection against attacks. To enforce HTTPS with Varnish Cache developers have to add an SSL/TLS terminator in front of Varnish Cache to convert HTTPS to HTTP.

A popular way to achieve this is by deploying Nginx as the SSL/TLS terminator. Nginx is also a reverse proxy that can cache content, although Varnish Cache is generally regarded as the faster and more configurable cache. Nginx accepts HTTPS traffic and, when installed in front of Varnish Cache, performs the HTTPS to HTTP conversion. If the origin only accepts HTTPS, developers should also install Nginx behind Varnish Cache so that requests are converted back to HTTPS before going to the origin.

Monitoring and Metrics for Varnish

Tools and techniques for monitoring Varnish Cache’s performance, cache hit/miss ratios, backend response times, and other essential metrics.

Monitoring Varnish Cache’s performance is crucial to understanding its impact on web application efficiency and user experience. Several tools and techniques can help gather valuable metrics and insights:

Varnishstat: Varnish includes a built-in monitoring tool called “varnishstat.” It provides real-time statistics on cache hits, misses, backend connections, cache eviction, and more. Command-line access to “varnishstat” enables developers to quickly assess cache performance.

Varnishlog: Another built-in utility, “varnishlog,” offers a detailed log of incoming requests and VCL processing. It helps troubleshoot and analyze VCL configurations, providing visibility into request handling and potential bottlenecks.

Logging and Analytics: Collecting Varnish log data and analyzing it through external log management or analytics tools, such as Elasticsearch, Logstash, and Kibana (ELK Stack), allows for in-depth examination of traffic patterns, cache behavior, and response times.

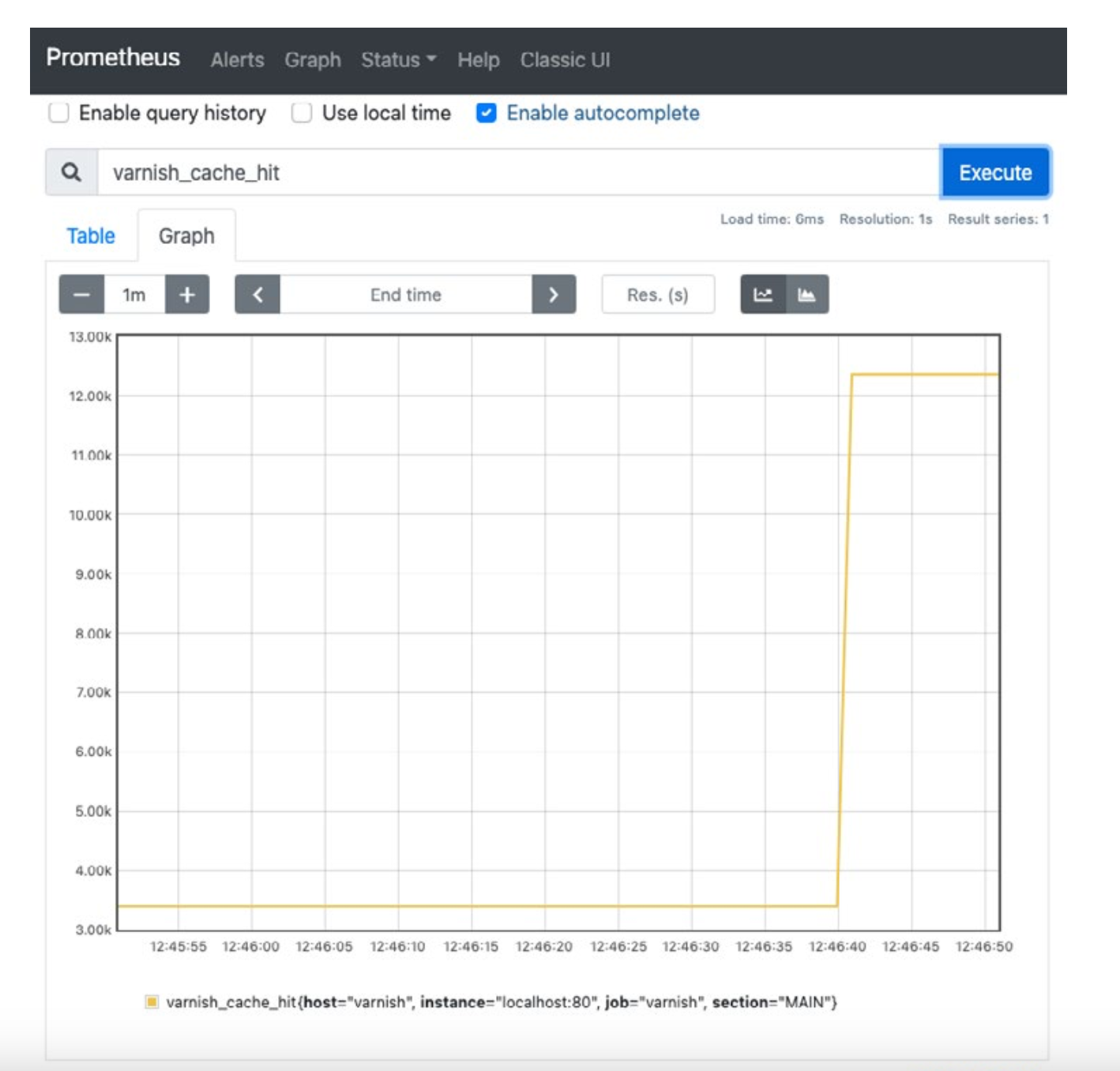

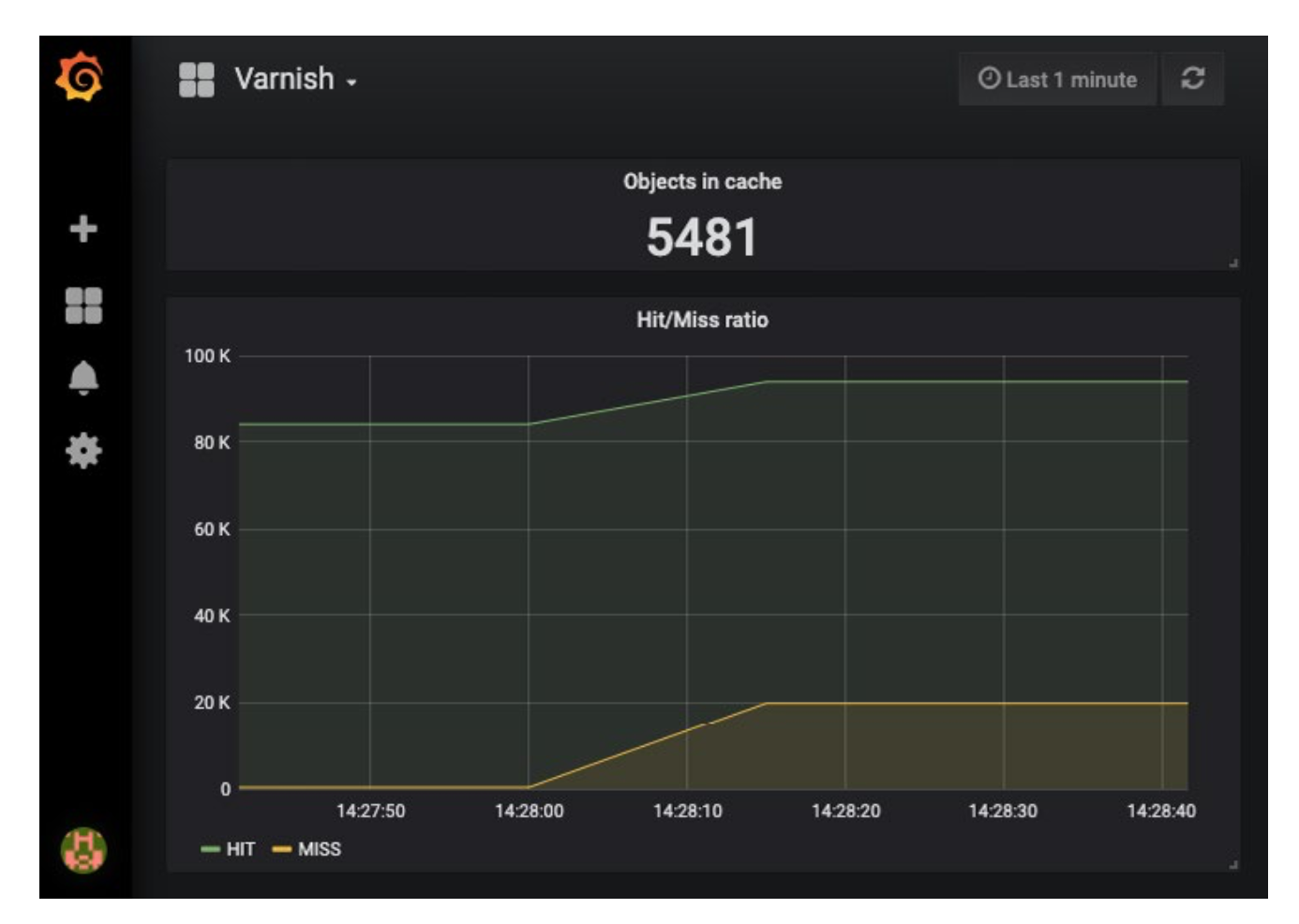

Varnish Dashboard: Build custom dashboards with a monitoring stack such as Prometheus for collecting metrics and Grafana for visualizing them, so that key performance metrics from Varnish Cache are visible in near real time. This provides an intuitive and comprehensive view of cache hit/miss ratios, backend response times, and other vital statistics.

Prometheus dashboard

Grafana dashboard

Backend Monitoring: Monitor backend server performance to identify potential bottlenecks and assess how Varnish interacts with the origin servers. Monitoring tools like New Relic, Datadog, or Zabbix can help track backend response times and server health.

Caching Effectiveness: By measuring cache hit/miss ratios and cache efficiency, developers can assess how well Varnish serves cached content and identify opportunities for improvement.

Cache Invalidation Auditing: Monitor cache invalidation events to ensure that cached content is purged appropriately when necessary, preventing stale or outdated content delivery.

Load Testing: Conduct load testing using tools like Apache JMeter or Siege to simulate heavy traffic and measure Varnish Cache’s performance under stress conditions.

By employing these monitoring tools and techniques, developers can gain valuable insights into Varnish Cache’s performance, identify optimization opportunities, and ensure that it effectively improves website performance and user experience.
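Alongside these tools, a debugging header added in VCL can make hits and misses visible on individual responses. The sketch below assumes Varnish 4.0 or later; the header names “X-Cache” and “X-Cache-Hits” are a widely used convention rather than anything Varnish defines.

```vcl
vcl 4.0;

# Assumption: the origin web server listens on 127.0.0.1:8080.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_deliver {
    # obj.hits counts how often this cached object has been delivered;
    # it is zero when the response was just fetched from the backend.
    if (obj.hits > 0) {
        set resp.http.X-Cache = "HIT";
    } else {
        set resp.http.X-Cache = "MISS";
    }
    set resp.http.X-Cache-Hits = obj.hits;
}
```

Checking the response headers of a page in a browser or with a command-line HTTP client then shows at a glance whether it was served from cache.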

Cache Invalidation Strategies

Techniques for keeping cached content fresh, including TTLs, Cache-Control headers, cache purging, conditional requests, and grace mode.

Varnish cache invalidation techniques are essential for ensuring that cached content remains up-to-date and accurate without causing unnecessary cache misses. Proper cache invalidation strikes a balance between serving cached content efficiently and promptly reflecting changes made to dynamic resources. Here are some common Varnish cache invalidation techniques:

TTL (Time-to-Live): Set a specific time duration, known as the Time-to-Live, for cached objects to remain valid. Once the TTL expires, Varnish automatically considers the content stale and fetches a fresh copy from the backend on the next request. Adjusting the TTL based on the content’s update frequency can minimize cache misses.

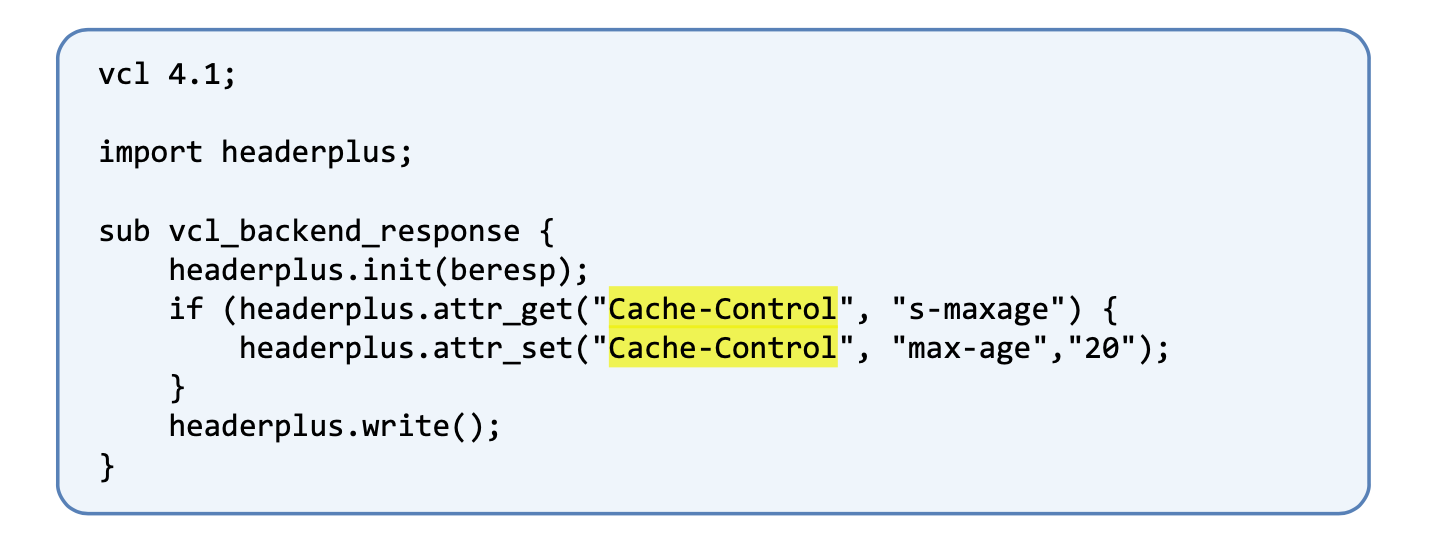

Cache-Control Headers: Leverage the Cache-Control header sent by the backend to instruct Varnish on caching behavior. By specifying cache-control directives like “max-age” and “s-maxage,” developers can control how long Varnish should cache the resource.

Cache-Control header
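By default Varnish derives an object’s TTL from the backend’s “s-maxage,” “max-age,” or “Expires” values, so VCL is mainly needed for fallbacks and overrides. The sketch below assumes Varnish 4.0 or later; the “/products/” path and both TTL values are hypothetical.

```vcl
vcl 4.0;

# Assumption: the origin web server listens on 127.0.0.1:8080.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_backend_response {
    # Fallback: the backend sent no caching headers at all,
    # so apply a modest default TTL.
    if (!beresp.http.Cache-Control && !beresp.http.Expires) {
        set beresp.ttl = 5m;
    }

    # Override: hypothetical product pages change often,
    # so cap their lifetime regardless of what the backend says.
    if (bereq.url ~ "^/products/") {
        set beresp.ttl = 60s;
    }
}
```

A backend response with `Cache-Control: public, s-maxage=600` would be cached for ten minutes without any VCL changes, unless it matches the override.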

Cache Purging: Manually or programmatically purge specific cached objects when they are updated. Using Varnish’s ban or purge functionality, developers can invalidate specific URLs or patterns to remove stale content from the cache.

Conditional Requests: Use HTTP conditional requests, with the “If-Modified-Since” and “If-None-Match” headers (the latter carrying an “ETag” value), to check whether stale cached content is still valid. If the backend answers “304 Not Modified,” Varnish refreshes the cached object without transferring the body again, which keeps revalidation cheap.

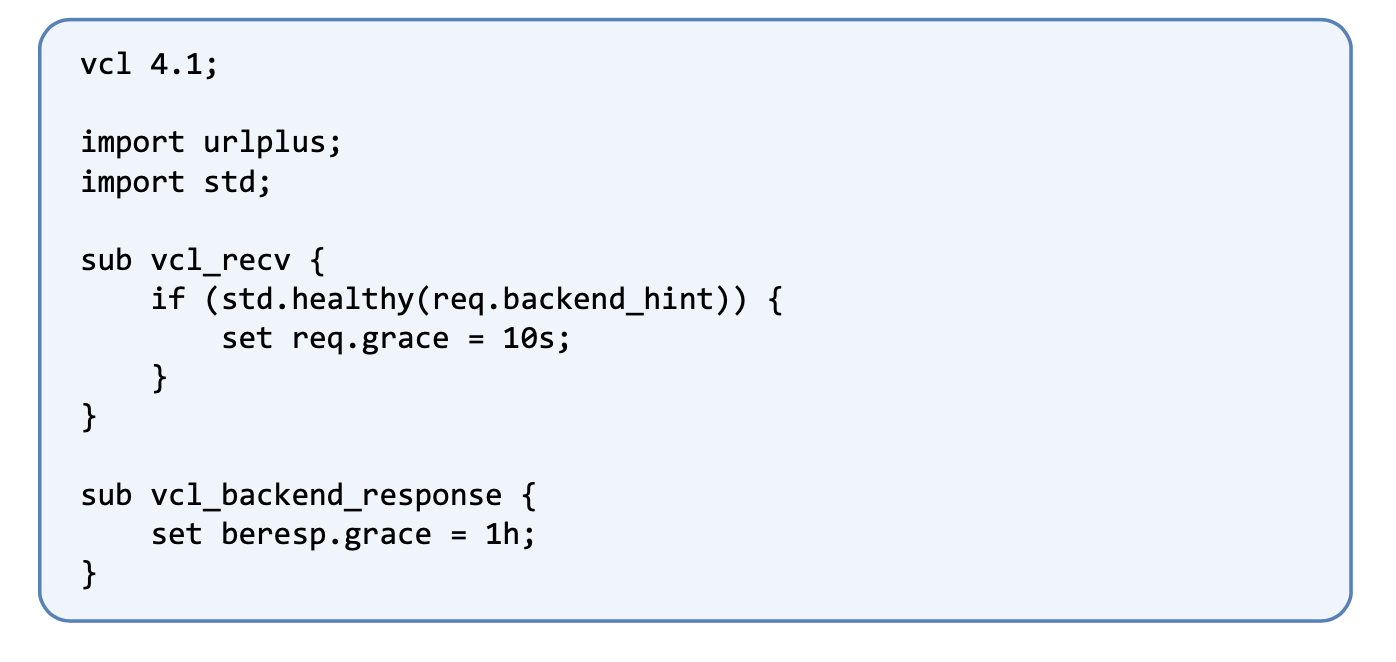

Grace Mode: Employ Varnish’s grace mode, which allows Varnish to serve stale content while fetching updated data from the backend. This ensures seamless delivery of content while reducing cache misses during backend fetches.

Using Grace Mode in VCL code
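A minimal sketch, assuming Varnish 4.0 or later; the durations are illustrative and should be tuned to how stale the content is allowed to be.

```vcl
vcl 4.0;

# Assumption: the origin web server listens on 127.0.0.1:8080.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_backend_response {
    # Objects are considered fresh for five minutes.
    set beresp.ttl = 5m;

    # For up to one hour after that, Varnish may serve the stale copy
    # while it fetches a new one in the background.
    set beresp.grace = 1h;

    # Keep the object around longer still, so it can be revalidated
    # with a conditional request instead of a full fetch.
    set beresp.keep = 1d;
}
```

The grace window also helps when the backend is briefly slow, since visitors keep receiving the last good copy instead of waiting.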

Edge-Side Includes (ESI): Utilize ESI to split web pages into fragments, enabling efficient caching of static components while fetching personalized or dynamic content separately. This way, only the dynamic fragments need to be updated, reducing the impact of cache invalidation on the entire page.

By implementing Varnish cache invalidation techniques, developers can maintain an effective caching strategy that ensures cached content remains up-to-date while minimizing unnecessary cache misses. This results in improved performance, reduced server load, and an enhanced user experience on the web application.
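Where purging removes one object at a time, a ban invalidates everything matching an expression. The sketch below assumes Varnish 4.0 or later and uses the built-in `ban()` function (newer releases also offer `std.ban()`); the ACL entries and the custom BAN method are placeholders.

```vcl
vcl 4.0;

# Assumption: the origin web server listens on 127.0.0.1:8080.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

# Hypothetical list of clients that are allowed to invalidate content.
acl invalidators {
    "localhost";
    "192.168.0.0"/24;
}

sub vcl_recv {
    if (req.method == "BAN") {
        if (client.ip !~ invalidators) {
            return (synth(405, "Banning not allowed"));
        }
        # Invalidate every cached object on this host whose URL
        # matches the request URL as a regular expression.
        ban("req.http.host == " + req.http.host + " && req.url ~ " + req.url);
        return (synth(200, "Ban added"));
    }
}
```

Sending `BAN /products/` from an allowed host would then invalidate all cached URLs containing that path in a single request.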

Varnish Cache for Ecommerce Websites

Leveraging Varnish Cache to optimize the performance of ecommerce sites, including handling dynamic content and personalization.

Leveraging Varnish Cache is highly beneficial for optimizing the performance of ecommerce sites, especially when dealing with dynamic content and personalized user experiences. Here’s how Varnish Cache can be utilized effectively:

Caching Static Content: Varnish can efficiently cache static elements like product images, CSS, and JavaScript, reducing the load on backend servers and speeding up page loads. This ensures that frequently accessed content is served from cache, resulting in faster and more responsive pages.

Edge-Side Includes (ESI): Implement ESI to cache different fragments of ecommerce pages separately. By caching static components while fetching personalized elements separately, Varnish can efficiently assemble personalized pages on-the-fly, combining cached and dynamic content.

Dynamic Content Caching: Configure Varnish to cache certain dynamically generated content, like product listings and search results, without compromising on real-time data accuracy. Using an appropriate cache TTL and cache purging techniques, developers can ensure that the cache remains up-to-date with relevant changes.

Session Handling: Employ Varnish to handle user sessions intelligently. By excluding session-specific data from caching or using ESI to cache personalized parts separately, Varnish can provide a personalized experience without serving the wrong content to multiple users.

Cache Invalidation Strategies: Develop robust cache invalidation strategies to promptly update cached content when products, prices, or inventory change. Utilize cache purging and conditional requests to ensure cache freshness while avoiding unnecessary backend requests.

Load Balancing: Implement Varnish in a load-balanced and highly available configuration to distribute traffic efficiently and handle sudden spikes in user activity during peak shopping periods.

Ecommerce Analytics: Integrate Varnish with ecommerce analytics tools to gain insights into cache performance, user behavior, and conversion rates. This information can guide further optimizations.

By leveraging Varnish Cache effectively, ecommerce sites can significantly improve website performance, reduce server load, and deliver a seamless and personalized shopping experience to their users. It enhances the overall user experience, boosts conversion rates, and strengthens customer loyalty, leading to a competitive edge in the ecommerce market.
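The session-handling and dynamic-content points above can be sketched as follows, assuming Varnish 4.0 or later. The URL paths, the “logged_in” cookie name, and the TTL values are hypothetical and would differ per ecommerce platform.

```vcl
vcl 4.0;

# Assumption: the origin web server listens on 127.0.0.1:8080.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_recv {
    # Never cache pages that are specific to one shopper.
    if (req.url ~ "^/(cart|checkout|account)") {
        return (pass);
    }

    # Hypothetical cookie marking a signed-in shopper: bypass the cache.
    if (req.http.Cookie ~ "(^|;\s*)logged_in=") {
        return (pass);
    }

    # Anonymous browsing: drop cookies so catalog pages can be shared.
    unset req.http.Cookie;
}

sub vcl_backend_response {
    # Cache catalog and search pages briefly so price and stock
    # changes show up quickly, with grace to absorb traffic spikes.
    if (bereq.url ~ "^/(products|category|search)") {
        set beresp.ttl = 2m;
        set beresp.grace = 10m;
    }
}
```

Responses that carry a Set-Cookie header are still left uncached by the built-in VCL, which guards against one shopper’s session leaking to another.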

What Content to Cache with Varnish Cache

For achieving optimum web performance, specific types of content should be cached with Varnish.

To achieve optimum web performance and ensure a seamless customer experience, Varnish Cache should be strategically utilized to cache specific types of content. The goal is to prioritize caching elements that contribute significantly to page load times and user interactions. Here are some key types of content that should be cached with Varnish:

Static Assets: Static resources like images (png, jpg, gif), CSS stylesheets & fonts, and JavaScript libraries should be cached as they rarely change and contribute to the bulk of a webpage’s size. Caching these assets ensures quicker load times and reduced bandwidth usage.

Full HTML Documents: The real value of Varnish Cache is in caching the HTML document. The HTML document has all the information needed to build a web page, including CSS files, text, and links to images. A slowly loading HTML document delays the time to first byte (TTFB) and start render time (SRT), adversely impacting user experience and SEO.

Frequently Accessed Pages: Frequently accessed pages, like homepages, product listings, and landing pages, should be cached to improve response times and reduce the load on backend servers.

Database Queries: Varnish caches HTTP responses rather than query results, but caching the pages or API endpoints that are built from complex database queries can help alleviate the load on the database server and expedite response times, especially for content that doesn’t change frequently.

API Responses: Cache responses from third-party APIs to minimize external requests, improve page load times, and ensure more consistent API performance.

Session-agnostic Content: Content that doesn’t rely on user sessions or personalization, such as blog posts or public information pages, can be effectively cached without risking data inconsistency.

Edge-Side Includes (ESI): Utilize ESI for personalized content. By caching static components and using ESI to assemble personalized fragments, Varnish can deliver dynamic content while benefiting from cached static elements.

Error Pages: Caching custom error pages ensures a smoother experience for users in case of unexpected errors and prevents unnecessary backend requests.

By focusing on caching these types of content with Varnish, websites can significantly enhance web performance, reduce server load, and provide a seamless and engaging experience for their customers. However, it’s essential to strike a balance between cache efficiency and serving the most up-to-date content, particularly for dynamic and personalized elements that require careful cache invalidation strategies.
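A sketch of how some of these content types might be handled, assuming Varnish 4.0 or later. The file extensions and TTL values are illustrative.

```vcl
vcl 4.0;

# Assumption: the origin web server listens on 127.0.0.1:8080.
backend default {
    .host = "127.0.0.1";
    .port = "8080";
}

sub vcl_recv {
    # Static assets do not vary per user, so ignore cookies for them.
    if (req.url ~ "(?i)\.(png|jpe?g|gif|css|js|woff2?)(\?.*)?$") {
        unset req.http.Cookie;
    }
}

sub vcl_backend_response {
    # Static assets: strip cookies set by the backend and cache for a week.
    if (bereq.url ~ "(?i)\.(png|jpe?g|gif|css|js|woff2?)(\?.*)?$") {
        unset beresp.http.Set-Cookie;
        set beresp.ttl = 7d;
    }

    # Error pages: cache "not found" responses briefly so repeated
    # requests for missing URLs do not reach the backend.
    if (beresp.status == 404) {
        set beresp.ttl = 30s;
    }
}
```

Long TTLs on static assets work best when file names change with each release, so that a new deployment is picked up immediately.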

Integrating Varnish Cache with a Content Delivery Network (CDN)

Combine Varnish Cache with a CDN to further improve website performance, reduce server load, and enhance global content delivery.

Integrating Varnish Cache with a Content Delivery Network (CDN) is a powerful strategy to supercharge website performance, reduce server load, and optimize content delivery on a global scale. By combining Varnish’s edge caching capabilities with a CDN, businesses can enhance their web application’s responsiveness and provide a seamless experience to users worldwide.

When Varnish Cache is deployed at the CDN edge nodes, it acts as an additional caching layer. This setup allows static content, such as images, CSS, and JavaScript, to be cached closer to end-users, significantly reducing latency and speeding up page loads. With cached content being served from nearby CDN servers, the origin server’s load is dramatically reduced, leading to improved server scalability and better handling of traffic spikes.

Moreover, Varnish Cache’s advanced caching mechanisms, coupled with the CDN’s distribution network, ensure that content remains available even during sudden surges in demand or server outages. The combination enables seamless failover and high availability, enhancing overall website reliability.

The integration also enhances the CDN’s performance by reducing the load on its servers, improving cache hit ratios, and minimizing the need for frequent requests to the origin server. This synergy results in a more cost-effective and efficient content delivery process.

The integration of Varnish Cache with a CDN empowers businesses to deliver content faster, provide a superior user experience, and optimize server resources. This powerful combination is especially valuable for global businesses seeking to provide consistent and high-performing web experiences to users across the world.

Though Varnish Cache is open source and free to download and install, there are several providers that offer Varnish Cache as a service or hosted versions of Varnish Cache for a fee. Webscale is the Intelligent CloudOps Platform offering 7 versions of unmodified Varnish Cache up to 5.1.2. If you have any questions about Varnish Cache and VCL, contact us and one of our Varnish Cache experts would be happy to help you.