For software companies, offering their software as a SaaS solution rather than an on-premises or cloud-only model means:

- Shorter customer deployment times, which equate to a shorter time to revenue

- An opportunity to add upsell and cross-sell product lines, which means both increased revenue per customer and a more complete (and therefore more competitive) product offering

However, companies looking to offer their software as a SaaS solution to a global customer base face a stark choice: treat customers outside of the primary geography as second-class citizens, or embrace a globally distributed deployment model.

TL;DR

Organizations are leveraging Webscale CloudFlow to create holistic SaaS offerings of their core software so their customers can adopt their software with just a DNS change.

Webscale CloudFlow Features for SaaSification

- Simple application deployment and management (Kubernetes Edge Interface)

- Webscale CloudFlow’s global footprint (Composable Edge Cloud)

- Add-on security and performance upsell and cross-sell modules

- Webscale CloudFlow’s simple integration points and reference application

Examples Include:

- Quant CDN Using Webscale CloudFlow to build a new type of Content Delivery Network

- Wallarm Relies on Webscale CloudFlow to power its API Security Platform

- Optimizely Leveraging Webscale CloudFlow to build a global image optimization platform

- Drupal Association Powers their Drupal Steward security platform with Webscale CloudFlow

SaaSification Overview

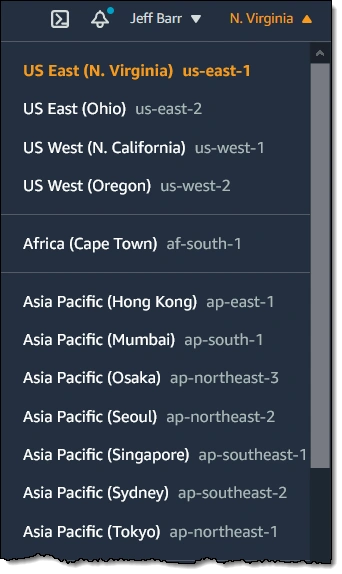

For organizations that are early in their maturity, the first option is typically the default choice. These companies will pick a particular cloud instance for deployment, such as AWS EC2 US-East, and customers within or close to that region will enjoy a premium experience. As customers get geographically further from that particular region, application performance, responsiveness, resilience and availability will naturally degrade. In short, this is the default “good enough” cloud deployment focused largely on a home-grown user base.

Image sourced from AWS

As companies get serious about their global business opportunities, they increasingly shift to the second choice by moving part or all of their application (and its data) closer to the users. This geographic proximity typically improves the user experience across the board. It is, in a nutshell, the beginning of an edge compute model.
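To put a rough number on why proximity matters, the sketch below estimates the best-case network round-trip time between a user and a deployment region. It assumes light travels through optical fiber at roughly 200,000 km/s and that traffic follows the great-circle path, so real latency will be higher once routing, queuing and server time are added. The function names and the coordinates are illustrative, not part of any Webscale CloudFlow API.

```python
import math

# Assumption: light in optical fiber covers roughly 200 km per millisecond.
FIBER_KM_PER_MS = 200.0
EARTH_RADIUS_KM = 6371.0


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance in kilometers between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    d_phi = phi2 - phi1
    d_lambda = math.radians(lon2 - lon1)
    a = (math.sin(d_phi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(d_lambda / 2) ** 2)
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km):
    """Lower bound on round-trip time: there and back at fiber speed."""
    return 2 * distance_km / FIBER_KM_PER_MS


# Approximate coordinates, for illustration only.
northern_virginia = (38.9, -77.4)
sydney = (-33.9, 151.2)

distance = great_circle_km(*northern_virginia, *sydney)
print(f"{distance:.0f} km, at least {min_rtt_ms(distance):.0f} ms per round trip")
```

Because a single page load typically involves many round trips, even this physical floor adds up quickly for users far from the home region.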

Unfortunately, while offering substantial technical and business benefits, edge computing can also be considerably more complex to manage than a centralized cloud instance. In part, this is because it strives to treat global users as true first-class customers, and thus must take their particular requirements into account. Let’s look at some of the key considerations, and then talk about how to address them.

Regulatory compliance

Different countries and regions have unique rules and regulations, and truly global deployments must take these into consideration. A notable example is the European Union’s General Data Protection Regulation (GDPR), which governs data protection and privacy for any user who resides in the European Economic Area (EEA). Importantly, it applies to any company, regardless of location, that processes or transfers the personal information of individuals residing in the EEA. For instance, GDPR may regulate how and where data is stored for your application. Moreover, many countries have adopted GDPR-style protections for their own citizens.

While the intricacies of regulatory compliance are outside the scope of this post, it’s important to recognize that these requirements exist, and that organizations should partner with network and compute providers that have experience adhering to compliance standards.

Geographic workload and performance optimization

Global application usage varies in the same way it varies within any one geography: by time and by customer density. Users wake up, log on, finish their day and log off, and usage is heaviest where the most target customers are located. At the global level, the difference is one of scale: usage patterns generally follow the sun, and dense population centers can be simultaneously “online” but geographically far-flung.

From a compute perspective, this means that systems must be optimized to account for these global variances, from both a performance and a cost-efficiency standpoint. In fact, beyond just “optimized”, global networks should be actively and intelligently tuned to account for shifting usage patterns in real time.

For example, Webscale CloudFlow’s patented Adaptive Edge Engine (AEE) focuses on compute efficiency by using machine learning algorithms to dynamically adapt to real-time traffic demands. By spinning up compute resources and making deployment adjustments, the AEE places workloads in, and routes traffic to, the optimal locations at a given time. So, rather than relying on a team of site reliability engineers (SREs) to continuously monitor and adjust resources and routing, companies can leverage intelligent automation across a distributed edge network.
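To make the idea of demand-driven placement concrete, here is a deliberately simple sketch. It is not the AEE’s algorithm, which is not described in this post; it is a plain greedy heuristic that picks a limited number of locations to minimize demand-weighted latency, then routes each user region to its closest active location. The location names, region names, latencies and request counts are all made-up assumptions.

```python
def weighted_latency(active, demand, latency_ms):
    """Total demand-weighted latency when each region uses its nearest active location."""
    return sum(
        requests * min(latency_ms[loc][region] for loc in active)
        for region, requests in demand.items()
    )


def choose_locations(demand, latency_ms, max_locations):
    """Greedily add the location that lowers weighted latency the most."""
    active = []
    candidates = sorted(latency_ms)  # sorted so ties resolve deterministically
    while candidates and len(active) < max_locations:
        best = min(
            candidates,
            key=lambda loc: weighted_latency(active + [loc], demand, latency_ms),
        )
        active.append(best)
        candidates.remove(best)
    return active


def route(active, demand, latency_ms):
    """Send each user region to its lowest-latency active location."""
    return {
        region: min(active, key=lambda loc: latency_ms[loc][region])
        for region in demand
    }


# Hypothetical latencies (ms) from each candidate location to each user region.
latency_ms = {
    "us-east": {"na": 20, "eu": 90, "apac": 200},
    "eu-west": {"na": 90, "eu": 15, "apac": 160},
    "ap-southeast": {"na": 180, "eu": 170, "apac": 25},
}

# Hypothetical requests per minute at two different times of day.
apac_daytime = {"na": 100, "eu": 300, "apac": 1000}
na_daytime = {"na": 1000, "eu": 300, "apac": 100}

for label, demand in (("APAC daytime", apac_daytime), ("NA daytime", na_daytime)):
    active = choose_locations(demand, latency_ms, max_locations=2)
    print(label, active, route(active, demand, latency_ms))
```

With a budget of two locations, the chosen pair changes as demand follows the sun, which is the behavior an automated system would need to reproduce continuously and at much larger scale.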

Global network coverage

A closely related consideration is network coverage and accessibility. Simply put, are edge compute resources available where my users need them to be? Partnering with a single vendor whose coverage doesn’t extend where needed is very limiting, yet aggregating different vendors into a “global” network can significantly increase complexity, cost, and risk. This is especially true if those vendors have different compute, deployment, and observability parameters, and you’re the one who needs to manage around those differences. Similarly, in light of the performance optimization discussed above, are you the one who has to manage the spinning up and spinning down of compute resources across different vendors to cover different geographies?

An equally important consideration: do my chosen partners cover not only where I need to be today, but also where I am likely to see future growth? And if they do, can they dynamically shift compute resources across their networks in response to real-time traffic demands?

Global observability

When it comes to DevOps, the last thing organizations want is to discover performance or security issues after the fact based on user complaints, yet this observability isn’t easy to achieve across distributed systems. Ideally, organizations need real-time visibility into traffic flows and time series to evaluate performance and diagnose issues. Logs should be easy to consume and search as needed. Performance metrics need to be easy to visualize and available not only in real time, but also at varying levels (network, domain, and edge service) to quickly identify patterns.
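As a small illustration of viewing the same metric at several levels, the sketch below rolls raw latency samples up to the network, domain and edge-service level and reports a nearest-rank percentile for each. The sample fields (`domain`, `service`, `latency_ms`) and the example values are assumptions made for this sketch, not the schema of any particular observability product.

```python
import math
from collections import defaultdict


def percentile(values, pct):
    """Nearest-rank percentile (pct is an integer from 1 to 100)."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def roll_up(samples, pct=95):
    """Group latency samples at three levels and return the percentile of each group."""
    groups = defaultdict(list)
    for sample in samples:
        latency = sample["latency_ms"]
        groups[("network",)].append(latency)
        groups[("domain", sample["domain"])].append(latency)
        groups[("service", sample["domain"], sample["service"])].append(latency)
    return {key: percentile(values, pct) for key, values in groups.items()}


# Hypothetical samples, as they might be parsed from edge logs.
samples = [
    {"domain": "shop.example.com", "service": "waf", "latency_ms": 12},
    {"domain": "shop.example.com", "service": "waf", "latency_ms": 18},
    {"domain": "shop.example.com", "service": "image-opt", "latency_ms": 140},
    {"domain": "docs.example.com", "service": "waf", "latency_ms": 9},
]

for key, value in sorted(roll_up(samples).items()):
    print(key, value)
```

Comparing the three levels side by side makes it easier to tell whether a slowdown is network-wide, limited to one domain, or caused by a single edge service.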

Global resilience

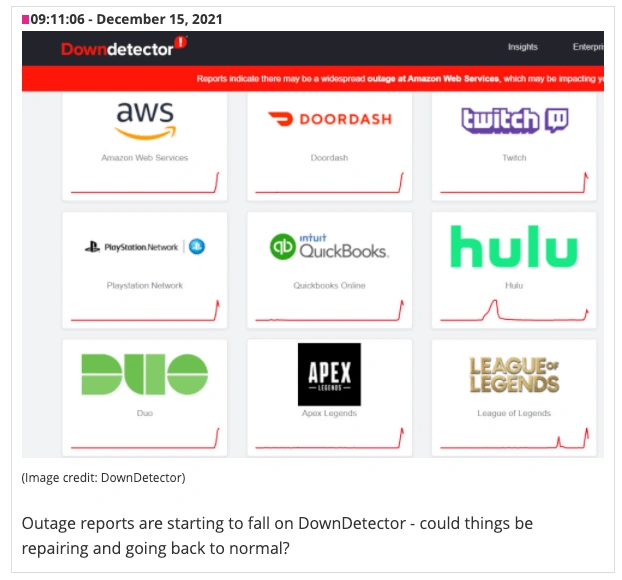

While the ability to spot issues is critical, it’s better that these issues – at least as they’re related to the edge compute platform – not surface in the first place. Yet it’s an unfortunate reality that provider networks do, occasionally, go down. Witness the recent AWS outages that took down a wide variety of applications in the U.S.

This points to the notion of building for resilience at the global level: if a single provider network goes down, there should be built-in fault tolerance to redirect to other providers. Ideally, this resilience and failover should be automated based on health checks that detect outages and dynamically reroute traffic to healthy endpoints.
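The health-check pattern described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not how Webscale CloudFlow implements failover: endpoints are listed in priority order, a probe decides whether each one is healthy, and traffic goes to the first healthy endpoint. The URLs are placeholders, and a production setup would also need retries, probe intervals and protection against flapping.

```python
import urllib.request


def http_probe(url, timeout=2.0):
    """Return True if the endpoint answers with a 2xx or 3xx status in time."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (OSError, ValueError):
        # Covers connection errors, timeouts, HTTP error statuses and bad URLs.
        return False


def pick_endpoint(endpoints, probe=http_probe):
    """Return the first healthy endpoint in priority order, or None if all fail."""
    for endpoint in endpoints:
        if probe(endpoint):
            return endpoint
    return None


# Hypothetical health-check URLs on two different provider networks.
endpoints = [
    "https://provider-a.example.com/healthz",
    "https://provider-b.example.com/healthz",
]

# Simulate an outage at provider A with a stand-in probe, so no network is needed.
down = {"https://provider-a.example.com/healthz"}
print(pick_endpoint(endpoints, probe=lambda url: url not in down))
```

Passing the probe in as a parameter keeps the routing decision separate from how health is measured, so the same logic can be exercised in tests or pointed at a different kind of check.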

SaaSification with Webscale CloudFlow

While it is certainly possible to self-manage global application deployment, most software organizations don’t want to get into the business of managing the intricate complexities of distributed networks. With Webscale CloudFlow, software vendors can leverage an out-of-the-box solution that gives them all the benefits of global deployment without the burden of building and managing it. The Webscale CloudFlow platform:

- Distributes Kubernetes clusters across a vendor-agnostic global network of leading infrastructure providers;

- Intelligently tunes your edge network to ensure workloads are in the optimal locations to maximize performance and cost benefits; and

- Includes built-in observability, resilience and security needed to make sure your application stays up and running.

On top of that, Webscale CloudFlow’s Recommended Partners offer a number of possible add-on services (e.g., web application firewall, performance optimization, and A/B testing) to extend the value of your software.

Ultimately, Webscale CloudFlow gives organizations all the business and performance benefits of global deployment, while allowing them to concentrate on their core business rather than distributed network management.