Over the last fifteen years or so, developers have become very familiar with cloud deployment. Whether they use AWS, Azure, GCP, DigitalOcean, or a more niche provider, the developer experience is fairly similar. For a developer, deploying a workload to the cloud typically involves:

- Identifying where your highest concentration of users is and selecting the single cloud location that will deliver the best performance to the greatest number of users.

- Connecting your code base, hosted in code repository tooling (e.g. GitHub, GitLab, Bitbucket), to your cloud environment

- Automating build and deployment through CI/CD tooling (e.g. Jenkins, CircleCI, Travis CI)
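The first step above, choosing one region for everyone, can be sketched as a small optimization problem. This is a minimal illustration, not any provider's tool. The metro names, region labels, latency figures and the 100 ms `budget_ms` threshold are all hypothetical. The function picks the region that keeps the most users within the latency budget. It breaks ties by the lower user-weighted average latency.

```python
from typing import Dict, Optional

# Illustrative inputs only: user counts per metro and assumed round-trip
# latency (ms) from each metro to each candidate region.
users = {"new_york": 500, "london": 300, "tokyo": 200}
latency_ms = {
    "us-east": {"new_york": 10, "london": 75, "tokyo": 160},
    "eu-west": {"new_york": 75, "london": 10, "tokyo": 220},
    "ap-northeast": {"new_york": 160, "london": 220, "tokyo": 10},
}


def pick_region(users: Dict[str, int],
                latency_ms: Dict[str, Dict[str, float]],
                budget_ms: float = 100.0) -> Optional[str]:
    """Pick the single region that serves the most users within budget_ms,
    breaking ties by the lower user-weighted average latency."""
    total_users = sum(users.values())
    best_region, best_key = None, None
    for region, by_metro in latency_ms.items():
        served = sum(n for metro, n in users.items() if by_metro[metro] <= budget_ms)
        avg = sum(n * by_metro[metro] for metro, n in users.items()) / total_users
        key = (-served, avg)  # more users within budget first, then lower latency
        if best_key is None or key < best_key:
            best_region, best_key = region, key
    return best_region


print(pick_region(users, latency_ms))  # us-east
```

Even the winning region leaves the 200 Tokyo users at roughly 160 ms. That is the gap that pushing code out to the edge aims to close.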

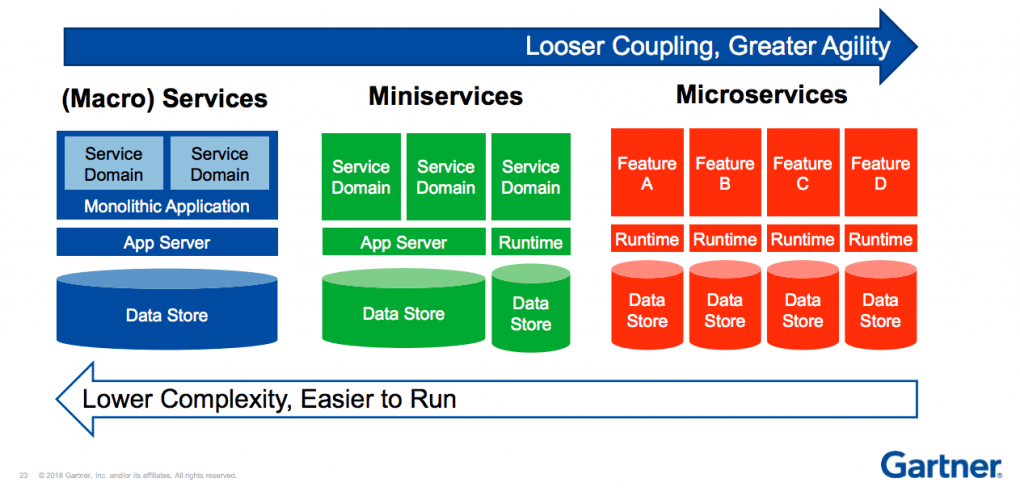

These processes are fairly straightforward when all code and microservices feed into a single deployment endpoint. But what happens when you add hundreds of edge endpoints to the mix, with different microservices served from different edge locations at different times? In this kind of environment, how do you decide which edge endpoints your code should run on at any given time? More importantly, how do you manage the constant orchestration across these nodes when the underlying infrastructure is a heterogeneous mix from many different providers?

Image source: Gartner

In this blog, we’ll look at some of the complexities involved in streamlining the developer experience around edge deployment and ongoing management.

The Edge Developer Experience: Managing Complexity

As edge computing gains traction, both in its own right and as part of hybrid cloud infrastructure, increasing numbers of developers are being asked to program in a new computing paradigm. Building for the edge is a complex, ever-shifting puzzle that must first be solved and then managed.

Some of the complexities surrounding the edge developer experience include:

- Code and configuration management

- Edge runtimes

- Distributed diagnostics and telemetry

- Application lifecycle management

Code and Configuration Management

Every application is unique, so developers need granular, code-level control over edge configuration to fit their application’s specific needs. At the same time, they require simple, streamlined workflows so they can keep up the pace of innovation while maintaining secure, dependable application delivery.
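One way to get code-level control is to keep edge configuration in the repository as code and validate it before it ships. The following is a minimal sketch under that assumption. `EdgeConfig`, `RouteRule` and the `off`/`log`/`block` WAF modes are hypothetical and are not any vendor's schema. Requests resolve to the rule with the longest matching path prefix.

```python
from dataclasses import dataclass, field
from typing import List, Optional

WAF_MODES = {"off", "log", "block"}  # hypothetical modes for illustration


@dataclass
class RouteRule:
    path_prefix: str         # e.g. "/api/"
    cache_ttl_s: int = 0     # 0 disables edge caching for this route
    waf_mode: str = "block"


@dataclass
class EdgeConfig:
    service: str
    routes: List[RouteRule] = field(default_factory=list)

    def validate(self) -> List[str]:
        """Return human-readable problems; an empty list means the config is valid."""
        errors, seen = [], set()
        for r in self.routes:
            if not r.path_prefix.startswith("/"):
                errors.append(f"{r.path_prefix!r}: path must start with '/'")
            if r.path_prefix in seen:
                errors.append(f"{r.path_prefix!r}: duplicate route")
            seen.add(r.path_prefix)
            if r.cache_ttl_s < 0:
                errors.append(f"{r.path_prefix!r}: cache TTL cannot be negative")
            if r.waf_mode not in WAF_MODES:
                errors.append(f"{r.path_prefix!r}: unknown WAF mode {r.waf_mode!r}")
        return errors

    def rule_for(self, path: str) -> Optional[RouteRule]:
        """Resolve a request path to the rule with the longest matching prefix."""
        matches = [r for r in self.routes if path.startswith(r.path_prefix)]
        return max(matches, key=lambda r: len(r.path_prefix), default=None)


storefront = EdgeConfig("storefront", [
    RouteRule("/", cache_ttl_s=60),
    RouteRule("/api/", cache_ttl_s=0),
    RouteRule("/static/", cache_ttl_s=86400, waf_mode="off"),
])
print(storefront.validate())                         # []
print(storefront.rule_for("/api/cart").path_prefix)  # /api/
```

Because the config is plain code, it can be reviewed, diffed and tested like the rest of the application. That is the flexibility a hard-coded, closed configuration surface does not offer.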

With various industry players competing for a share of the edge computing market, from hyperscalers to CDNs, there are many considerations for evolving the developer experience to adapt to edge nuances.

For example, many traditional CDNs have hard-coded proprietary software (e.g. web application firewall technology) into their solutions and offer very limited configuration options. Developers relying on legacy CDNs for edge services can therefore find themselves backed into a corner. They are forced to bolt on additional solutions that inevitably erase some of the benefits they sought from the CDN in the first place.

Furthermore, developers are increasingly looking to migrate more application logic to the edge for performance, security, and cost-efficiency gains. A few examples of workloads moving to the edge include:

- Micro APIs – Hosting small, targeted APIs at the edge for use cases such as search or fully-featured content exploration with GraphQL (which enables faster responses on user queries while lowering costs).

- Headless Commerce – Decoupling the presentation layer from back-end eCommerce functions allows you to push more services to the edge to create custom user experiences, achieve performance gains, and improve operational efficiencies.

- Full application hosting at the edge – More and more developers are exploring the idea of hosting their entire application at the edge. Instead of calling back to a centralized origin, they host databases alongside apps at the edge and sync data across distributed endpoints. This is quickly becoming a reality and could become the new normal as edge computing matures.
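Syncing databases across distributed edge endpoints requires a rule for resolving conflicting writes. A minimal sketch of one common option follows: a last-writer-wins (LWW) map in the spirit of CRDTs. It is one possible approach, not the only one, and the replica names and timestamps are hypothetical. Timestamps are passed in explicitly. Real deployments must deal with clock skew (for example, with hybrid logical clocks). LWW also silently discards the losing concurrent write.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# A write is stamped (timestamp, replica_id); comparing stamps as tuples makes the
# later timestamp win and uses the replica id to break exact ties deterministically.
Stamp = Tuple[float, str]


@dataclass
class LWWMap:
    replica_id: str
    entries: Dict[str, Tuple[str, Stamp]] = field(default_factory=dict)

    def put(self, key: str, value: str, ts: float) -> None:
        self._apply(key, value, (ts, self.replica_id))

    def _apply(self, key: str, value: str, stamp: Stamp) -> None:
        current = self.entries.get(key)
        if current is None or stamp > current[1]:
            self.entries[key] = (value, stamp)

    def merge(self, other: "LWWMap") -> None:
        """Pull another replica's state. Assuming a replica never reuses a timestamp,
        replicas converge regardless of the order in which they exchange state."""
        for key, (value, stamp) in other.entries.items():
            self._apply(key, value, stamp)

    def get(self, key: str) -> Optional[str]:
        entry = self.entries.get(key)
        return entry[0] if entry is not None else None


fra, syd = LWWMap("fra"), LWWMap("syd")
fra.put("cart:42", "2 items", ts=100.0)
syd.put("cart:42", "3 items", ts=105.0)
fra.merge(syd)
syd.merge(fra)
print(fra.get("cart:42"), syd.get("cart:42"))  # 3 items 3 items
```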

The types of workloads being considered for edge deployment are many and diverse. In order for developers to progress towards migrating more advanced workloads to the edge, they require flexible solutions that support distribution of code across programming languages and frameworks.

Edge Runtimes

Runtime describes the final phase of the program lifecycle, which involves the machine executing the program’s code. As more developers continue to adopt edge computing for modern applications, edge platforms and infrastructure will need to support different runtime environments.

At the edge, runtime complexity is largely a question of interoperability: managing many different runtimes across distributed systems. Developers need to run each application in the runtime environment its programming language requires. A platform that aims to serve diverse developer needs must therefore support those runtimes.

One of the most widely used runtime environments for JavaScript is Node.js. Businesses large and small use it to build applications that execute JavaScript code outside a web browser. Other well-known runtime environments include:

- The Java Runtime Environment, a prerequisite for running Java programs.
- The .NET Framework, which is required to run .NET Framework applications on Windows.
- Cygwin, which provides a POSIX-compatible environment on Windows so that many Linux and Unix applications can be rebuilt and run there.

With developers building across many different runtime environments, Edge as a Service offerings need to be able to support code portability. We can’t expect developers to refactor their code base to fit into a rigid, pre-defined framework. Instead, multi-cloud and edge platforms and services must be flexible enough to adapt to different architectures, frameworks and programming languages.
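Code portability has a practical consequence for placement. The platform should send a workload only to nodes that can run its runtime unchanged, rather than asking developers to refactor. The following is a minimal, hypothetical sketch of that filter. The node names, provider labels and runtime tags are invented for illustration. It fails loudly when no node can host a workload instead of silently dropping it.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet, List


@dataclass(frozen=True)
class EdgeNode:
    name: str
    provider: str
    runtimes: FrozenSet[str]  # runtimes this node can execute as-is


@dataclass(frozen=True)
class Workload:
    name: str
    runtime: str  # the runtime the code was written for


def place(workloads: List[Workload], nodes: List[EdgeNode]) -> Dict[str, List[str]]:
    """Map each workload to every node that can run it unchanged.
    Raise instead of silently dropping a workload no node can host."""
    plan: Dict[str, List[str]] = {}
    for w in workloads:
        targets = [n.name for n in nodes if w.runtime in n.runtimes]
        if not targets:
            raise ValueError(f"no edge node supports {w.runtime!r} (needed by {w.name})")
        plan[w.name] = targets
    return plan


nodes = [
    EdgeNode("edge-1", "provider-a", frozenset({"nodejs", "jre"})),
    EdgeNode("edge-2", "provider-b", frozenset({"nodejs"})),
    EdgeNode("edge-3", "provider-c", frozenset({"dotnet", "jre"})),
]
print(place([Workload("search-api", "nodejs"), Workload("pricing", "jre")], nodes))
# {'search-api': ['edge-1', 'edge-2'], 'pricing': ['edge-1', 'edge-3']}
```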

Distributed Diagnostics and Telemetry

Developers need a holistic understanding of the state of their application at any given time. Observability becomes far more complex when delivery nodes are distributed across a diverse set of infrastructure from different providers.

As reported in a Dynatrace survey of 700 CIOs, “The dynamic nature of today’s hybrid, multicloud ecosystems amplifies complexity. 61% of CIOs say their IT environment changes every minute or less, while 32% say their environment changes at least once every second.” To add to the complexities of trying to keep up with dynamic systems, that same report revealed that:

“On average, organizations are using 10 monitoring solutions across their technology stacks. However, digital teams only have full observability into 11% of their application and infrastructure environments.”

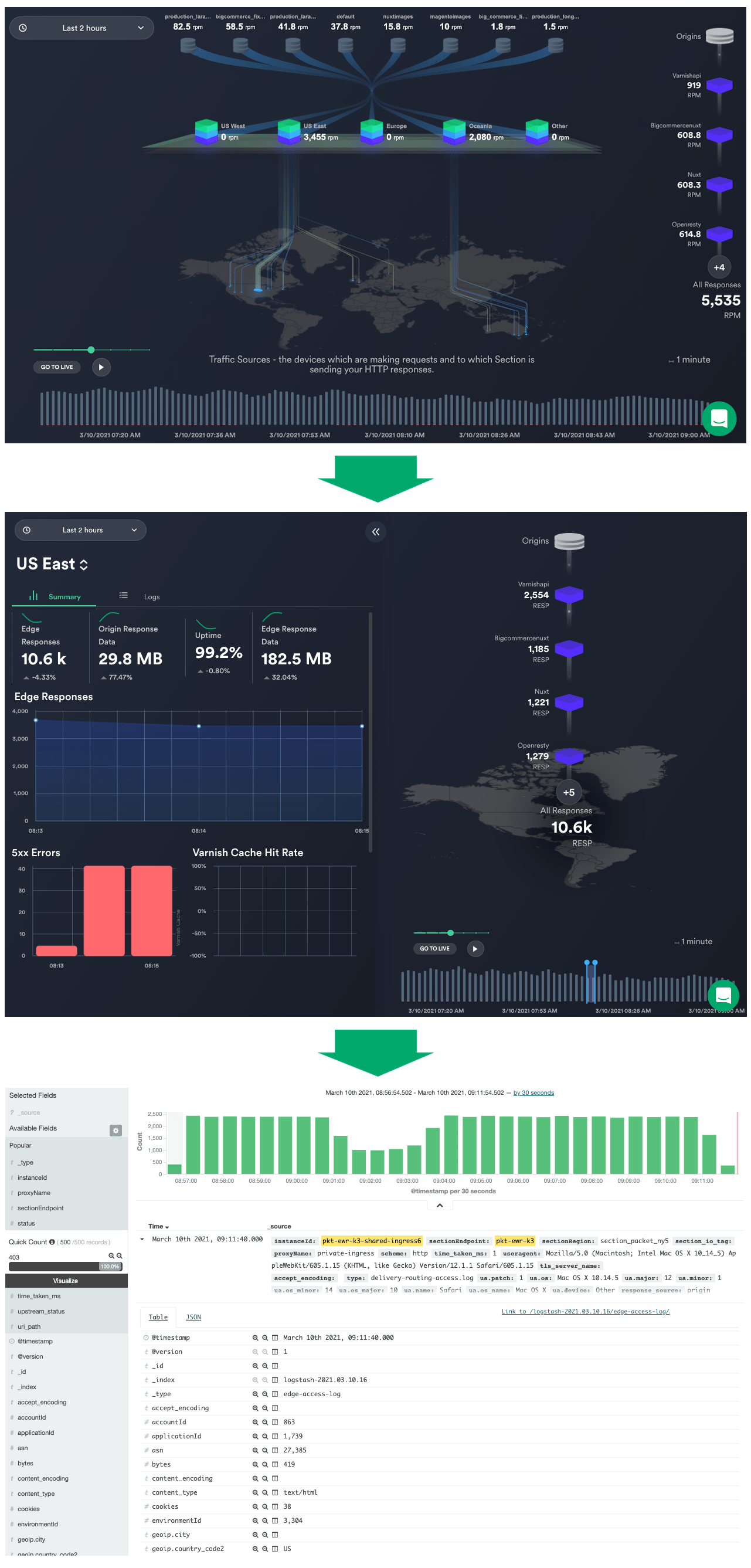

An effective multi-cloud/edge observability solution should provide a single pane of glass that draws together data from many locations and infrastructure providers. This kind of visibility is essential for developers to gain insight into the entire application development and delivery lifecycle. The right centralized telemetry solution lets engineers evaluate performance, diagnose problems, observe traffic patterns, and share insights with key stakeholders.
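The "single pane of glass" idea depends on one step: normalizing each provider's records into a common schema before aggregating them. The following is a minimal sketch under stated assumptions and does not reflect any particular product. Both provider log formats are hypothetical. Treating only 5xx statuses as errors is also an illustrative choice. The summary gives the at-a-glance view, and `drill_down` gives the per-location detail.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, Iterable, List, Tuple


@dataclass(frozen=True)
class Request:
    """Common schema that every provider's records are normalized into."""
    provider: str
    location: str
    path: str
    status: int


def from_provider_a(rec: dict) -> Request:
    # Hypothetical provider A: dict records with "pop", "path", "status" keys
    return Request("provider-a", rec["pop"], rec["path"], int(rec["status"]))


def from_provider_b(line: str) -> Request:
    # Hypothetical provider B: space-separated "<location> <method> <path> <status>"
    location, _method, path, status = line.split()
    return Request("provider-b", location, path, int(status))


def error_rate_by_location(reqs: Iterable[Request]) -> Dict[str, float]:
    """The at-a-glance view: share of 5xx responses per edge location."""
    totals, errors = Counter(), Counter()
    for r in reqs:
        totals[r.location] += 1
        if r.status >= 500:
            errors[r.location] += 1
    return {loc: errors[loc] / totals[loc] for loc in totals}


def drill_down(reqs: Iterable[Request], location: str) -> List[Tuple[str, int]]:
    """The drill-down view: most frequent failing paths at one location."""
    failing = Counter(r.path for r in reqs if r.location == location and r.status >= 500)
    return failing.most_common()


raw_a = [
    {"pop": "fra", "path": "/api/cart", "status": 503},
    {"pop": "fra", "path": "/", "status": 200},
    {"pop": "fra", "path": "/api/cart", "status": 502},
    {"pop": "fra", "path": "/search", "status": 200},
]
raw_b = ["syd GET /api/cart 200", "syd GET /search 200"]
records = [from_provider_a(r) for r in raw_a] + [from_provider_b(s) for s in raw_b]

print(error_rate_by_location(records))  # {'fra': 0.5, 'syd': 0.0}
print(drill_down(records, "fra"))       # [('/api/cart', 2)]
```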

At Webscale, we conducted a good deal of user research before building our Traffic Monitor, which gives engineers, application owners, and business leaders a straightforward way to see how traffic is flowing through their edge architecture. Across our interviews, development teams told us they want tooling that shows them at a glance what is happening with their application traffic and edge/multi-cloud workloads. They also want to be able to drill down into individual logs when needed.

For example, the Traffic Monitor provides an easy way for DevOps teams to quickly observe errors across their edge stacks, then click to drill into the root cause of those errors without having to form complex queries to get the information they need.

Centralized Application Lifecycle

Along with configuration flexibility and control and comprehensive observability tooling, developers need to be able to easily manage their application lifecycle systems and processes. For a single developer or small team overseeing a small, centrally managed code base, this is fairly straightforward. However, an application may be broken up into hundreds of microservices managed across teams, with a diverse mix of deployment models within its architecture. Lifecycle management then becomes far more complex and can slow down development cycles.

Edge deployment adds two further complexities. Code must be pushed to a distributed edge, and the application must stay cohesive across that distributed delivery plane at all times, even during a deployment cycle. As a result, application lifecycle management for the edge becomes vastly more complex than the centralized approaches used to date with cloud.
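One simple way to keep the application cohesive during a deployment is a wave-based rollout. It never lets more than two versions run at once. If any wave fails, it returns the whole fleet to a single version. The following is a minimal sketch of that policy, not a description of any specific platform. The `healthy` callback stands in for whatever health probe a real system would use, and the node names are hypothetical.

```python
from typing import Callable, Dict, List


def rolling_deploy(fleet: Dict[str, str], new_version: str, wave_size: int,
                   healthy: Callable[[str], bool]) -> List[List[str]]:
    """Upgrade edge nodes in waves. At most two versions (old and new) ever run
    at once, and if any wave fails its health check, every upgraded node is
    returned to the old version so the fleet is cohesive again."""
    versions = set(fleet.values())
    if len(versions) != 1:
        raise ValueError(f"fleet must start on one version, found {sorted(versions)}")
    old = versions.pop()
    nodes = sorted(fleet)
    upgraded: List[str] = []
    waves: List[List[str]] = []
    for i in range(0, len(nodes), wave_size):
        wave = nodes[i:i + wave_size]
        for node in wave:
            fleet[node] = new_version
        upgraded.extend(wave)
        if not all(healthy(node) for node in wave):
            for node in upgraded:
                fleet[node] = old
            raise RuntimeError(f"wave {wave} failed health checks; fleet rolled back to {old}")
        waves.append(wave)
    return waves


fleet = {"edge-1": "v1", "edge-2": "v1", "edge-3": "v1"}
print(rolling_deploy(fleet, "v2", wave_size=2, healthy=lambda node: True))
# [['edge-1', 'edge-2'], ['edge-3']]
```

Rolling back every upgraded node, not just the failing wave, trades speed for consistency. End users never see a long-lived split between versions.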

GitOps

To streamline operations, many teams take advantage of GitOps workflows. GitOps is a way of implementing Continuous Deployment for cloud native applications. It focuses on a developer-centric experience when operating infrastructure, by using tools developers are already familiar with, including Git and Continuous Deployment tools.

While responsibilities and oversight of different parts of an application’s code may be siloed within an organization, all of the code needs to feed into a unified code base. In order for developers to be able to move more services to the edge, they need tooling that is underpinned by GitOps principles for a fully integrated edge-cloud application lifecycle.
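At the core of GitOps is a reconciliation loop with three steps:

- The desired state lives in Git.
- An agent compares that state with what is actually running.
- The agent applies the difference.

Tools such as Argo CD and Flux do this for Kubernetes. The following is not their API. It is a minimal, hypothetical illustration in which plain dictionaries (service name to version) stand in for the repository and the edge fleet.

```python
from typing import Dict, List, Tuple

State = Dict[str, str]         # service name -> version
Action = Tuple[str, str, str]  # (operation, service, version)


def plan(desired: State, actual: State) -> List[Action]:
    """Diff the state declared in Git against what the edge is actually running."""
    actions: List[Action] = []
    for svc in sorted(desired.keys() | actual.keys()):
        want, have = desired.get(svc), actual.get(svc)
        if want == have:
            continue
        if have is None:
            actions.append(("deploy", svc, want))
        elif want is None:
            actions.append(("remove", svc, have))
        else:
            actions.append(("update", svc, want))
    return actions


def reconcile(desired: State, actual: State) -> List[Action]:
    """One pass of the control loop: converge actual on desired, report what changed."""
    actions = plan(desired, actual)
    for op, svc, version in actions:
        if op == "remove":
            del actual[svc]
        else:
            actual[svc] = version
    return actions


desired = {"search-api": "v3", "checkout": "v7"}      # as committed to the repo
actual = {"search-api": "v2", "legacy-banner": "v1"}  # as reported by the edge
print(reconcile(desired, actual))
# [('deploy', 'checkout', 'v7'), ('remove', 'legacy-banner', 'v1'), ('update', 'search-api', 'v3')]
print(reconcile(desired, actual))  # [] (already converged)
```

Because the loop is idempotent, running it repeatedly is safe. Any drift on an edge node is corrected on the next pass rather than by hand.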

CI/CD

As an integral part of GitOps workflows, developers need flexibility and control when it comes to integrating edge deployment processes into existing continuous integration/continuous delivery (CI/CD) pipelines.

There are three main principles worth following when managing changes to your edge configuration through a CI/CD pipeline:

- Optimize for fast feedback. Identify steps within the pipeline that need optimizing by tracking the execution time of individual stages. The time from pushing a change to version control to making that change live should be no longer than five minutes. Fast feedback is important for quickly confirming that your changes meet business needs and for cutting out technical debt and unnecessary costs.

- Chunk your changes, test immediately. Instead of changing multiple things in batches and then testing for the effect, interleave the changes and tests, and stop execution immediately if the tests fail. By turning changes into small, verifiable units, you lessen the risk factor.

- Push all changes through the pipeline. You lose the benefits you’re striving for if you accommodate changes outside the process.
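The first two principles above can be sketched together as a tiny pipeline runner. It pairs each small change with its test, stops at the first failure, records per-step timings, and flags runs that exceed the five-minute (300 s) budget. This is a hypothetical illustration, not a real CI tool's API. The step names and the `edge_config` dictionary are invented. A real pipeline would also revert the failed change.

```python
import time
from typing import Callable, List, Tuple

# A step pairs one small change with the test that verifies it.
Step = Tuple[str, Callable[[], None], Callable[[], bool]]


def run_pipeline(steps: List[Step], budget_s: float = 300.0,
                 clock: Callable[[], float] = time.monotonic):
    """Apply each change then test it immediately, stopping at the first failure.
    Returns (passed, per-step timings, message)."""
    timings: List[Tuple[str, float]] = []
    start = clock()
    for name, apply_change, test in steps:
        t0 = clock()
        apply_change()
        passed = test()
        timings.append((name, clock() - t0))
        if not passed:
            return False, timings, f"stopped: test failed after {name!r}"
    total = clock() - start
    if total > budget_s:
        return True, timings, f"passed, but took {total:.0f}s (budget {budget_s:.0f}s)"
    return True, timings, "passed"


edge_config = {}
steps = [
    ("cache-ttl", lambda: edge_config.update(ttl=60), lambda: edge_config["ttl"] == 60),
    ("waf-mode", lambda: edge_config.update(waf="strict"),
     lambda: edge_config["waf"] in {"off", "log", "block"}),
    ("new-route", lambda: edge_config.update(route="/api/"), lambda: True),
]
ok, timings, message = run_pipeline(steps)
print(ok, message)  # False stopped: test failed after 'waf-mode'
```

The third step never runs, which is the point of interleaving small changes with tests. The failure is pinned to one change instead of a batch.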

Delivering and maintaining code across distributed edge infrastructure is harder than in a centralized cloud. It must be done with enough speed and consistency that every end user gets the same application experience at all times, just as if they were all connecting to one centralized application.

Consistency is Key

The key to accelerating edge computing adoption is making the experience of programming at the edge as familiar as possible to developers, explicitly drawing on concepts from cloud deployment to do so. The added complexities that a distributed edge deployment brings introduce new challenges to achieving consistency across these experiences.

“When we look at the challenges of scale and operational consistency, the edge cannot be seen as a point solution that then needs to be managed separately or differently across hundreds of sites – this would be incredibly complex. In order to be successful, you need to manage your edge sites in the same way you would the rest of your places in the network – from the core to the edge. This helps minimize complexity and deliver on the operational excellence that organizations are striving for.”

Rosa Guntrip, Senior Principal Marketing Manager, Cloud Platforms, Red Hat

The right Edge as a Service platform will help minimize complexity, enabling developers to focus on innovation and executing mission-critical tasks instead of juggling all the pieces involved in managing edge/multi-cloud workloads.

Be sure to check out the next piece in this series: “How to Approach Application Selection, Deployment, and Management for the Edge.”