This is the final post in a three-part series digging into the complexities that developers and operations engineers face when building, managing, and scaling edge architectures. In the first post, we looked at the complexities of replicating the cloud developer experience at the edge. In the second post, we discussed how to approach application selection, deployment, and management for the edge. In this post, we’ll focus on the complexities of managing the network, infrastructure, and operations in a distributed compute environment.

We are in the midst of a foundational technological shift in communications infrastructure. IDC predicts that by 2023, more than 50% of new enterprise IT infrastructure will be deployed at the edge, and the edge access market is predicted to drive $50 billion in revenues by 2027. To interconnect this hyper-distributed environment, which spans on-premises data centers, multiple clouds, and the edge, the network is evolving to become more agile, elastic, and cognitive.

Let’s dive into a high-level overview of some of the critical components you need to consider when building and operating distributed networks. Areas that we’ll cover include:

- DNS

- TLS

- DDoS mitigation (Layers 3, 4, 7)

- BGP/IP address management

- Edge location selection and availability

- Workload orchestration

- Load shedding and fault tolerance

- Compute provisioning and scaling

- Messaging framework

- Edge operations model and team

- Observability

- NOC integration

As you’re evaluating all of these considerations, bear in mind that working with Edge as a Service can solve many of these complexities for you.

DNS: A Critical Component in Networking Infrastructure

The Domain Name System (DNS) is often referred to as the phonebook of the Internet since it translates domain names to IP addresses, allowing browsers to load Internet resources. DNS provides a hierarchical naming model that lets clients “resolve” or “look up” the resource records linked to names. DNS therefore represents one of the most critical components of networking infrastructure.

DNS Services are Often Vulnerable to Threats

Other widely used Internet protocols have started to incorporate end-to-end encryption and authentication. However, many widely deployed DNS services remain unauthenticated and unencrypted, leaving DNS requests and responses vulnerable to threats from on-path network attackers. Hence, when building out DNS services, it’s critical to maintain a security-first approach.

DNS in a Distributed Computing Environment

DNS is lightweight, robust and distributed by design. However, new approaches to computing architecture, including multi-cloud and edge, introduce new considerations when implementing application traffic routing at the DNS level.

In a distributed compute environment, DNS routing entails ensuring that users are routed to the correct location based on a set of given objectives (e.g. performance, security/compliance, etc.). When routing, you need to take into account service discovery. The majority of the time, people use DNS for public-facing service discovery, but this can be challenging to pull off. Routing is complicated both in terms of ensuring that users are routed to the right location and that failover is handled – in other words, what will you do to help update routes when systems fail?
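
To make the routing and failover question concrete, here is a minimal Python sketch of the decision a DNS routing layer has to make: filter out endpoints that are down, then pick the best remaining one for the objective (latency, in this case). The `Endpoint` class, the endpoint names, and the latency figures are hypothetical, and a real managed DNS service would feed this decision from its own health checks and measurements.

```python
from dataclasses import dataclass, replace
from typing import Optional


@dataclass(frozen=True)
class Endpoint:
    name: str          # hypothetical endpoint label
    address: str       # IP address that would be returned in the DNS answer
    healthy: bool      # result of the most recent health check
    latency_ms: float  # measured latency from the user's region


def pick_endpoint(endpoints: list[Endpoint]) -> Optional[Endpoint]:
    """Return the lowest-latency healthy endpoint, or None if all are down."""
    candidates = [e for e in endpoints if e.healthy]
    if not candidates:
        return None
    return min(candidates, key=lambda e: e.latency_ms)


# Illustrative data only (addresses are from a documentation range).
endpoints = [
    Endpoint("edge-syd", "192.0.2.10", healthy=True, latency_ms=18.0),
    Endpoint("edge-sfo", "192.0.2.20", healthy=True, latency_ms=140.0),
]
print(pick_endpoint(endpoints).name)  # edge-syd

# Failover: once the closest endpoint fails its health check,
# the next answer routes users to the remaining healthy endpoint.
after_failure = [replace(endpoints[0], healthy=False), endpoints[1]]
print(pick_endpoint(after_failure).name)  # edge-sfo
```

The hard part in practice is not this selection step but keeping the `healthy` and `latency_ms` inputs accurate and propagating a changed answer quickly, which is bounded by record TTLs and resolver caching.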

CloudFlow’s Approach to DNS, Failover, and Service Discovery

As an Edge as a Service provider, CloudFlow uses a combination of managed DNS services for routing: Amazon Route 53 and NS1, which, when used in combination with CloudFlow’s Adaptive Edge Engine workload scheduler, allows us to determine which endpoints users are routed to. NS1 provides a broader and more granular set of filters, which enable state-based latency routing. (Its “up” filter is a particularly useful feature for multi-CDN or multi-cloud architecture.)

“NS1 brings automation, velocity, and security to modern application development and delivery. Enterprise infrastructure is evolving faster than ever before. Emerging technologies make it possible to spin up microservices and cloud instances in minutes. DevOps teams are churning out code 40 times faster than legacy production environments. New edge and serverless architectures are taking computing out of the data center and closer to devices enabling global real-time applications. Those organizations not born in the cloud-native era face the additional challenge of connecting legacy applications with new technology in the never-ending race to meet user demands for performance while driving efficiency and security.” – Kris Beevers, CEO, NS1

By working with managed DNS services that are at the forefront of new developments, CloudFlow keeps applications available, performant, secure, and scalable with integrated Anycast DNS hosting, while also removing the burdens of configuration, security, and ongoing management.

TLS: Provisioning, Management, and Deployment Across Distributed Systems

Transport Layer Security (TLS) is an encryption protocol that protects communications on the Internet. You can feel reassured that your browser is connected via TLS if your URL starts with HTTPS and there’s a padlock indicator assuring you that the connection is secure. TLS is also used in other applications, such as email and Usenet. It’s important to keep up with the latest version of TLS; its predecessor, the SSL protocol, is deprecated and should no longer be enabled.

When working with TLS in distributed environments, you have to either obtain your certificates from a commercial certificate authority such as DigiCert or use a free, automated certificate authority such as Let’s Encrypt together with an open source client like Certbot. Commercial certificate authorities such as DigiCert will provide automated tooling to provision certificates. With the open source route, you will find that you have to build the services that manage auto-renewal and the provisioning of new certificates yourself.
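
As an illustration of the kind of auto-renewal logic you would have to own, here is a minimal Python sketch that decides whether a certificate is due for renewal. It assumes the expiry date is available in the `notAfter` text format that Python’s standard `ssl` module reports for a peer certificate; the 30-day renewal window and the function names are assumptions chosen for the example, not a recommendation from any particular certificate authority.

```python
import ssl
from datetime import datetime, timezone

RENEWAL_WINDOW_DAYS = 30  # assumed threshold; tune to your own policy


def days_until_expiry(not_after: str, now: datetime) -> float:
    """Days between `now` and a certificate's notAfter timestamp.

    `not_after` uses the format "Jun 15 00:00:00 2025 GMT".
    """
    expires_ts = ssl.cert_time_to_seconds(not_after)
    expires = datetime.fromtimestamp(expires_ts, tz=timezone.utc)
    return (expires - now).total_seconds() / 86400


def needs_renewal(not_after: str, now: datetime,
                  window_days: int = RENEWAL_WINDOW_DAYS) -> bool:
    """True when the certificate expires within the renewal window."""
    return days_until_expiry(not_after, now) <= window_days


# Illustrative check against a fixed "current" time.
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
print(needs_renewal("Jun 15 00:00:00 2025 GMT", now))  # True
print(needs_renewal("Dec 15 00:00:00 2025 GMT", now))  # False
```

A check like this would need to run for every domain and every location where a certificate is installed, which is where the operational burden grows.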

An added complexity is the question of how you deploy these certificates. In distributed systems, certificates need to be present in multiple places. They might be spread across multiple providers; for example, you might be using one specific ingress controller in one location and a different ingress controller in another. How do you ensure that your certificates are deployed wherever they are needed to actually handle the TLS handshakes? And as the number of domains that you manage increases, so too do the complexities.

This ties directly back to DNS since you need to ensure that you’re routing traffic to the correct endpoints containing the workloads where your TLS certificates are deployed. Further, you will have to take into account the state of your systems at any point in time and how you route traffic, since you never want to be servicing users incorrectly.

Ultimately, servicing your user correctly is the end goal, meaning that when implementing TLS at the edge yourself, you have to take into account all these different components.

CloudFlow’s Edge as a Service includes advanced, managed SSL services to procure, install, renew, and test SSL/TLS certificates for your web applications.

DDoS: Protecting Layers 3, 4, and 7

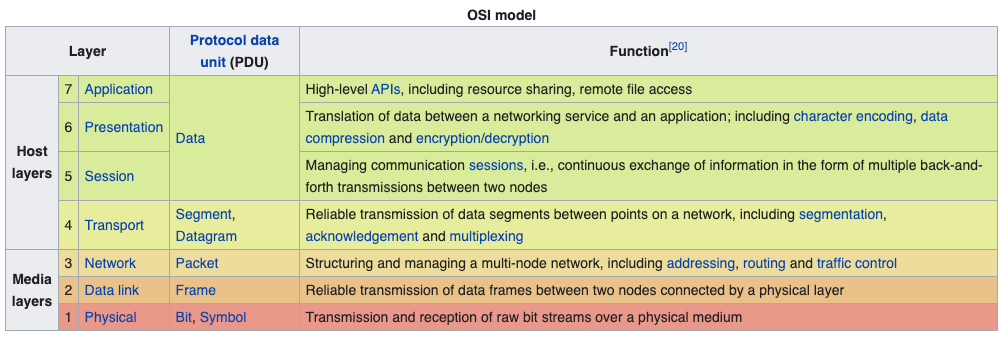

When protecting against Distributed Denial of Service (DDoS) attacks across distributed systems, the first question to ask should be, where are my systems most vulnerable to attack? The primary layers of focus for protecting against DDoS attacks include Layers 3, 4, and 7 in the OSI model.

Firms like Wallarm, Signal Sciences, ThreatX, and Snapt will provide DDoS protection for you at the application layer (i.e. Layer 7), and when you deploy these through Edge as a Service providers like CloudFlow, you’re able to leverage a distributed deployment model out-of-the-box.

However, in an edge computing paradigm that’s made up of heterogeneous networks of providers and infrastructure, there are more questions that need asking. The most important: how do all the different providers I’m using handle network and transport-layer DDoS attacks (i.e. Layers 3 and 4)?

Major cloud providers typically have built-in DDoS protection, but when you begin to expand across a multi-cloud environment, and even further out to the edge, you need to ensure that your applications are protected across the entire network. This includes knowing how each underlying provider handles DDoS protection, along with implementing safeguards for any areas in your networks that may be underprotected. This also takes us back to DNS and the question of how to handle traffic routing when one (or more) of your endpoints becomes compromised.

CloudFlow’s Adaptive Edge Engine works in conjunction with best-in-class WAF offerings, along with advanced DDoS mitigation to ensure your applications are always protected across your entire compute environment.

BGP/IP Address Management

The Border Gateway Protocol (BGP) is responsible for examining all the available paths that data can travel across the Internet and picking the best possible route, which usually involves hopping between autonomous systems. Essentially, BGP enables data routing on the Internet with more flexibility to determine the most efficient route for a given scenario.

BGP is also widely considered the most challenging routing protocol to design, configure, and maintain. Underlying the complexities are many attributes, route selection rules, configuration options, and filtering mechanisms that vary among different providers.

In an edge computing environment, rather than announcing IP addresses from a single location, BGP announcements must be made out of multiple locations, and determining the most efficient route at any given point becomes much more involved.
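
To give a feel for what route selection involves, here is a deliberately simplified Python sketch. It models only two steps of BGP’s decision process (prefer the highest local preference, then the shortest AS path) and adds a final tie-break on the next-hop address that is purely an illustrative stand-in. Real implementations apply further steps, such as origin type and MED, and the details vary by vendor. The `Route` class, AS numbers, and addresses are hypothetical and drawn from documentation ranges.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    prefix: str               # destination prefix being announced
    next_hop: str             # neighbor the route was learned from
    local_pref: int           # higher is preferred
    as_path: tuple[int, ...]  # autonomous systems the route traverses


def best_path(routes: list[Route]) -> Route:
    """Pick one route using a simplified subset of BGP's decision steps."""
    if not routes:
        raise ValueError("no candidate routes")
    return min(
        routes,
        # 1) highest local_pref, 2) shortest AS path, 3) stand-in tie-break
        key=lambda r: (-r.local_pref, len(r.as_path), r.next_hop),
    )


via_a = Route("203.0.113.0/24", "192.0.2.1", 100, (64496, 64497, 64500))
via_b = Route("203.0.113.0/24", "198.51.100.1", 100, (64498, 64500))
print(best_path([via_a, via_b]).next_hop)  # 198.51.100.1 (shorter AS path)

# A higher local preference overrides AS path length.
via_c = Route("203.0.113.0/24", "192.0.2.9", 200, (64496, 64497, 64499, 64500))
print(best_path([via_a, via_b, via_c]).next_hop)  # 192.0.2.9
```

When the same prefix is announced from many edge locations, every network on the Internet runs its own version of this decision, which is why the path a given user takes is hard to predict or control.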

Another important consideration when it comes to routing is load balancing at the transport layer (Layer 4). Building a Layer 4 load balancer is complicated for the following reasons:

- It must support BGP announcements.

- You need to own the IP space, which can be very costly.

- You need to understand BGP, which may require a team of network engineers to truly manage the system.

- You need to be able to announce in locations all around the world (which are also the most peered locations).

- Finally, you need to take into account where you’re load balancing traffic to. The distributed system that you’re running applications on must be able to support packets that are being load balanced from the load balancer in front.

The complexities of routing across multi-layer edge-cloud topologies are perhaps the most daunting when it comes to building distributed systems. This is why organizations are increasingly turning to Edge as a Service solutions that take care of all of this for you.

Edge Location Selection and Availability

An effective presence at the edge is based on having a robust location strategy. By moving workloads as close as possible to the end user, latency is reduced. Selecting the appropriate geographies for your specific application within a distributed compute footprint involves careful planning.

At Webscale, we operate an OpEx model and benefit from a very different kind of network from those of cloud providers and traditional content delivery networks. Our flexible strategies and workflows allow us to tailor the right edge network for each of our customers, delivering performance gains and reducing their costs. The CloudFlow Composable Edge Cloud is built on the foundations of AWS, GCP, Azure, Digital Ocean, Lumen, Equinix Metal, RackCorp, and others. We regularly add more hosting providers and have the capacity to deploy endpoints based on the specific needs of customers who want to define their own edge.

Compliance also plays a role in the selection of edge locations. Increasingly, regulations and compliance initiatives, such as GDPR in Europe, are requiring companies to store data in specific locations. Edge as a Service providers with flexible edge networks enable DevOps teams to be precise about where they want their data to be processed and stored, without the burdens associated with ongoing management.
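
The interplay between proximity and compliance can be sketched in a few lines of Python: restrict the candidate locations to the regions where data is allowed to live, then choose the closest one. The `Location` class, the region labels, and the city list are illustrative assumptions, and great-circle distance is only a rough proxy for network latency.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Location:
    name: str
    region: str  # hypothetical compliance region label, e.g. "EU"
    lat: float
    lon: float


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlam = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlam / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(a))


def nearest_compliant(locations: list[Location], user_lat: float, user_lon: float,
                      allowed_regions: set[str]) -> Optional[Location]:
    """Closest location whose region is allowed, or None if none qualifies."""
    eligible = [loc for loc in locations if loc.region in allowed_regions]
    if not eligible:
        return None
    return min(eligible, key=lambda loc: haversine_km(user_lat, user_lon, loc.lat, loc.lon))


locations = [
    Location("london", "UK", 51.5074, -0.1278),
    Location("frankfurt", "EU", 50.1109, 8.6821),
    Location("new-york", "US", 40.7128, -74.0060),
]
paris = (48.8566, 2.3522)
print(nearest_compliant(locations, *paris, {"EU", "UK", "US"}).name)  # london
print(nearest_compliant(locations, *paris, {"EU"}).name)              # frankfurt
```

The second call shows the trade-off: the compliance constraint can rule out the location that would otherwise be closest to the user.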

Workload Orchestration

Managing workload orchestration across hundreds, or even thousands, of edge endpoints is no simple feat. This can involve multiple components. You need to start with how you want the workload to be defined (e.g. full application hosting, micro APIs, etc.). Next, ask where it will be stored. Finally, take into account how the workload is actually deployed. How do you determine which edge endpoints your code should be running on at any specific time? What type of automation tooling and DevOps experience do you need to ensure that when you make changes, your code will run correctly?

Managing constant orchestration over a range of edge endpoints among a diverse mix of infrastructure from a network of different providers is highly complex. To migrate more advanced workloads to the edge at a faster rate, developers are increasingly turning to flexible Edge as a Service solutions, which support distribution of code across multiple programming languages and frameworks.

Load Shedding and Fault Tolerance

A load shedding system provides improved fault tolerance and resilience in message communications. Fault tolerance allows a system to continue to operate, potentially at a reduced level, in the event of a failure within one or more of its components.

With regard to load shedding and fault tolerance at the edge, the primary area of concern is ensuring that the systems handling your workloads and servicing requests aren’t overloaded. Essentially, how do you make sure that no single location is expected to scale infinitely, and how do you ensure that load is distributed appropriately?
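
One common way to keep a single location from being overloaded is to cap the number of requests it handles concurrently and reject the excess early. The following Python sketch shows that idea under stated assumptions: the `LoadShedder` class, the `handle` helper, and the capacity value are hypothetical, and a production system would typically combine this with routing that sends the rejected traffic to another location.

```python
import threading
from typing import Callable


class LoadShedder:
    """Reject new work once a fixed number of requests are in flight."""

    def __init__(self, max_in_flight: int) -> None:
        if max_in_flight < 1:
            raise ValueError("max_in_flight must be at least 1")
        self._max = max_in_flight
        self._in_flight = 0
        self._lock = threading.Lock()

    def try_acquire(self) -> bool:
        """Reserve a slot; return False if the location is at capacity."""
        with self._lock:
            if self._in_flight >= self._max:
                return False
            self._in_flight += 1
            return True

    def release(self) -> None:
        """Free a slot previously reserved with try_acquire()."""
        with self._lock:
            if self._in_flight > 0:
                self._in_flight -= 1


def handle(shedder: LoadShedder, work: Callable[[], str]) -> tuple[int, str]:
    """Run `work` if capacity allows, otherwise shed with a 503 status."""
    if not shedder.try_acquire():
        return 503, "overloaded, retry at another location"
    try:
        return 200, work()
    finally:
        shedder.release()


shedder = LoadShedder(max_in_flight=1)
print(handle(shedder, lambda: "ok"))  # (200, 'ok')
```

Shedding early with a cheap rejection keeps the location responsive for the requests it does accept, which is the "reduced level" of operation that fault tolerance aims for.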

Compute Provisioning and Scaling

Load shedding and fault tolerance bring us to autoscaling and the configuration of autoscaling systems. One of Kubernetes’ biggest strengths is its ability to perform effective autoscaling of resources. Kubernetes doesn’t support just one autoscaler or autoscaling approach, but three:

- Pod replica count – This involves adding or removing pod replicas in direct response to changes in demand for applications using the Horizontal Pod Autoscaler (HPA).

- Cluster autoscaler – As opposed to scaling the number of running pods in a cluster, the cluster autoscaler is used to change the number of nodes in a cluster. This helps manage the costs of running Kubernetes clusters across cloud and edge infrastructure.

- Vertical pod autoscaling – The Vertical Pod Autoscaler (VPA) works by increasing or decreasing the CPU and memory resource requests of pod containers. The goal is to match cluster resource allotment to actual usage.
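
For the first of these, the Kubernetes documentation describes the Horizontal Pod Autoscaler’s core calculation as desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue). The Python sketch below reproduces just that formula with a clamp to minimum and maximum replica counts; the function name and default bounds are assumptions for the example, and the real controller also applies a tolerance band and stabilization behavior that are omitted here.

```python
import math


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, min_replicas: int = 1,
                     max_replicas: int = 10) -> int:
    """Replica count suggested by the HPA scaling formula, clamped to bounds."""
    if target_metric <= 0:
        raise ValueError("target_metric must be positive")
    raw = math.ceil(current_replicas * (current_metric / target_metric))
    return max(min_replicas, min(max_replicas, raw))


# Average CPU at 90% against a 60% target: scale 4 pods up to 6.
print(desired_replicas(4, current_metric=90, target_metric=60))   # 6
# Average CPU at 30% against a 60% target: scale 4 pods down to 2.
print(desired_replicas(4, current_metric=30, target_metric=60))   # 2
# The max bound caps an otherwise larger result (5 * 4 = 20).
print(desired_replicas(5, current_metric=400, target_metric=100)) # 10
```

The `max_replicas` bound is also a simple guard against any one location being asked to scale without limit.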

If you’re not using a container orchestration system like Kubernetes, compute provisioning and scaling can get very challenging very quickly.

Messaging Framework

The messaging system provides the means by which you can distribute your configuration changes, cache ban requests, and trace requests to all your running proxy instances in the edge network or CDN, and report back results.

This involves two primary components:

- Workload orchestration

- Receiving updates – if your system needs to receive a message or update, how does it get it, and does it get there reliably?
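
The reliability question can be illustrated with a small Python sketch that fans a message out to every proxy instance, tracks acknowledgements, and retries only the instances that have not confirmed receipt. This is a generic illustration rather than the API of any particular messaging framework: the `broadcast` function, the `send` callback, and the proxy names are all hypothetical.

```python
from typing import Callable


def broadcast(message: str, targets: list[str],
              send: Callable[[str, str], bool],
              max_attempts: int = 3) -> dict[str, bool]:
    """Deliver `message` to each target, retrying those that do not ack.

    `send(target, message)` is assumed to return True on acknowledgement.
    Returns a map of target name to final delivery status.
    """
    acked = {target: False for target in targets}
    for _ in range(max_attempts):
        pending = [t for t, ok in acked.items() if not ok]
        if not pending:
            break
        for target in pending:
            acked[target] = send(target, message)
    return acked


# Simulated transport: proxy-b acks on its second attempt, proxy-c never acks.
attempts: dict[str, int] = {}


def flaky_send(target: str, message: str) -> bool:
    attempts[target] = attempts.get(target, 0) + 1
    if target == "proxy-b":
        return attempts[target] >= 2
    return target != "proxy-c"


result = broadcast("cache-ban /img/*", ["proxy-a", "proxy-b", "proxy-c"], flaky_send)
print(result)  # {'proxy-a': True, 'proxy-b': True, 'proxy-c': False}
```

The returned status map is the "report back results" half of the problem: anything still marked `False` needs follow-up, since an instance that missed a cache ban or configuration change will keep serving stale behavior.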

At Webscale, we rely on MCollective for this, a framework that allows us to build server orchestration or parallel job execution systems, meaning we can programmatically execute administrative tasks on clusters of servers.

Edge Operations Model and Team

To truly manage distributed network, infrastructure, and operations at the edge, you will likely need an edge operations model with an experienced team composed of:

- Network engineers

- Platform engineers

- DevOps engineers, with an emphasis on site reliability engineering (SRE)

If you don’t have some or all of these specialists, or don’t have the expertise or resources in edge computing to manage the network, infrastructure, and operations, you can work with an Edge as a Service provider whose solutions abstract away many of the complexities associated with edge computing.

Observability

It’s imperative to treat observability as a first-class citizen when designing distributed systems, or any system for that matter. Reliable and real-time information is critical for engineers and operations teams who need to understand what is happening with their applications at all times.

Observability is also a key element in disaster recovery planning and implementation. Your infrastructure needs to be observable and flexible so that you can understand what has broken and what needs to be fixed. If a critical error occurs, you need visibility into your system to keep the incident as brief as possible versus experiencing a protracted disaster.

Observability is a cornerstone of Edge as a Service offerings. As part of a comprehensive edge observability suite, the CloudFlow Console includes the ELK stack (Elasticsearch, Logstash and Kibana) for storing, searching and visualizing logs for your application and each of your edge modules, along with a next-gen Traffic Monitor, which gives DevOps engineers, application owners and business execs a straightforward way to visualize how traffic is flowing across their edge architecture.

NOC Integration

Enterprises are taking steps to unify their network operations centers (NOCs) and security operations centers (SOCs). Why? By creating alignment between these two often siloed teams, organizations can reduce costs, optimize resources and improve the speed and efficiency of incident response and related security functions.

You need to take into account the expertise of your team when planning a NOC and/or SOC integration. Not everyone will have the range of crossover experience necessary to pull off a successful integration.

Conclusion

While we’ve covered a lot in this overview, this is just a subset of the critical components and complexities that come with building and operating cloud-edge networks and infrastructure, and we have really only scratched the surface of each of the considerations above.

Every organization, team, and application has unique requirements when it comes to designing distributed systems. Because of this, many teams start down the path of building their own bespoke systems, and typically become overwhelmed rather quickly with all of the complexities that play into design decisions and implementation.

As an Edge as a Service provider, CloudFlow is often pulled into projects during the early stages of research and discovery, where we’re able to offload the build and management of many, if not most, of the critical components, ultimately accelerating the path to edge for organizations across a diverse range of use cases.